- Publication: Situational-Awareness.AI

- Publication Date: 6/4/2024

- Organizations mentioned: OpenAI, Google, Microsoft, Anthropic, RAND Corporation

- Publication Authors: Leopold Aschenbrenner

- Technical background required: Medium

- Estimated read time (original text): 180 minutes

- Sentiment score: 55%, neutral

Goal:

Leopold Aschenbrenner, an AI researcher and analyst, authored this report to provide a comprehensive analysis of the potential development of artificial general intelligence (AGI) and superintelligence by the end of the 2020s. The report aims to raise awareness of the rapid advancement of AI technology and its implications for national security.

Methodology:

- The author analyzes current trends in AI development, focusing on the scaling of compute power, algorithmic efficiencies, and “unhobbling” gains in AI capabilities.

- Historical precedents, such as the Manhattan Project, are used to draw parallels and insights for the potential development of AGI.

- The report combines technical analysis, economic modeling, and geopolitical considerations to present a holistic view of the AGI landscape.

Key findings:

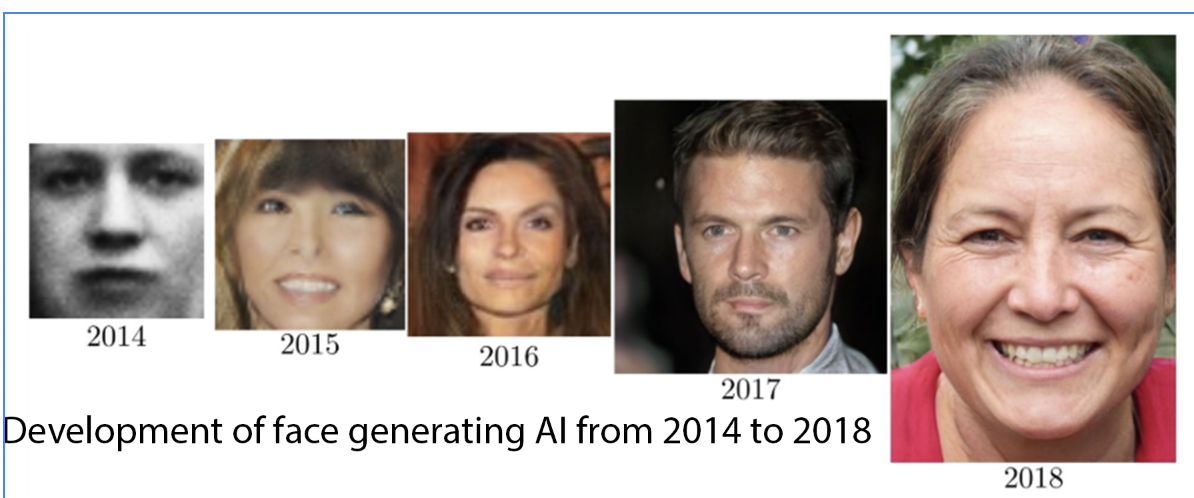

- AGI development is progressing rapidly, with the potential for human-level AI by 2027 and superintelligence shortly after. This projection rests on a consistent trend of roughly 0.5 orders of magnitude (OOMs) of improvement per year in each of compute power and algorithmic efficiency.

- The development of AGI could trigger an “intelligence explosion,” where AI systems capable of improving themselves lead to rapid, exponential growth in capabilities. This could compress a decade of progress into a single year.

- The “superalignment” problem – ensuring AI systems much smarter than humans remain controllable – remains unsolved and poses significant risks during rapid AI advancement.

- The race for AGI has significant geopolitical implications, with the potential to dramatically shift the balance of global power.

- Massive industrial mobilization, including significant increases in power generation and chip production, will be necessary to support AGI development. This could require investments on the scale of trillions of dollars.
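The OOM arithmetic behind the first finding can be sketched in a few lines. This is only an illustration of how the two ~0.5 OOM/year trends quoted above compound, not the report's own model: the function name `effective_compute_multiplier` and the four-year horizon are assumptions chosen for the example, and "unhobbling" gains are left out because the summary gives no number for them.

```python
def effective_compute_multiplier(years, compute_ooms_per_year=0.5, algo_ooms_per_year=0.5):
    """Return (total OOMs, multiplier) for a given number of years.

    The default rates are the ~0.5 OOM/year figures quoted in the summary.
    Orders of magnitude add, so the underlying multipliers compound.
    """
    total_ooms = years * (compute_ooms_per_year + algo_ooms_per_year)
    return total_ooms, 10 ** total_ooms


# Illustrative horizon (an assumption): four years of both trends holding.
ooms, multiplier = effective_compute_multiplier(4)
print(f"{ooms:.1f} OOMs -> {multiplier:,.0f}x effective compute")
```

Under these assumptions, four years of both trends add up to about 4 OOMs, or roughly a 10,000x increase in effective compute. Changing either rate or the horizon shows how sensitive the projection is to the trends continuing.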

Recommendations:

- Urgently improve security measures at AI labs to protect critical AGI breakthroughs and model weights from theft or espionage. This may require government involvement and the implementation of national security-level protocols.

- Accelerate research into AI alignment and safety, with a particular focus on solving the “superalignment” problem before the development of superintelligence.

- Prepare for a potential government-led “AGI Project” similar to the Manhattan Project, which would coordinate national resources and expertise towards safe AGI development.

- Develop a robust international framework for AGI governance, including non-proliferation agreements and benefit-sharing mechanisms, under the leadership of democratic nations.

- Invest heavily in the necessary infrastructure, particularly in power generation and chip production, to support large-scale AGI development within democratic countries.

Implications:

- If the report’s predictions are accurate and AGI is developed by the end of this decade, it could lead to unprecedented economic and societal disruption. Industries relying on cognitive labor may face rapid automation, potentially causing widespread unemployment and requiring a fundamental reimagining of economic structures and social safety nets.

- The geopolitical implications of AGI development could reshape the global order. If a single nation or alliance achieves a significant lead in AGI, it could result in a dramatic power imbalance, potentially leading to increased international tensions or conflicts. Conversely, if nations collaborate on AGI development and governance, it could foster a new era of global cooperation.

- The massive industrial mobilization required for AGI development, particularly in power generation and chip production, could accelerate technological progress in these sectors. This could have positive spillover effects for renewable energy adoption, computing capabilities in other fields, and overall economic growth.

Alternative perspectives:

- The report’s timeline for AGI development may be too aggressive. Many AI researchers argue that significant conceptual breakthroughs, not just scaling of current technologies, are necessary for AGI. The path to AGI might be longer and more complex than the report’s trend extrapolations suggest.

- The focus on a government-led “AGI Project” might underestimate the innovative potential of diverse, competitive private sector efforts. A more decentralized approach to AGI development could lead to more robust and safe outcomes by avoiding single points of failure and fostering a wider range of approaches.

- The report’s emphasis on national security and geopolitical competition in AGI development might overstate the zero-sum nature of the technology. AGI could potentially be a global public good, with its benefits more easily shared than traditional military technologies. This perspective might suggest a different approach to international cooperation and governance.

AI predictions:

- By 2026, we will see the first AI system capable of consistently outperforming human experts across a wide range of cognitive tasks, including scientific research and software engineering. This will lead to a surge in AI-driven discoveries and innovations across multiple fields.

- The development of AGI will not follow a smooth trajectory, but will instead be characterized by sudden breakthroughs interspersed with periods of apparent stagnation. This uneven progress will make it challenging to predict and prepare for AGI emergence, potentially leading to societal and economic shocks.

- International tensions over AGI development will escalate, leading to the formation of competing AI alliances by 2028. These alliances will differ in their approaches to AI safety, governance, and deployment, creating a new dimension of global politics centered around AI policy and ethics.

Glossary:

- Situational awareness: The state of understanding the true implications and trajectory of AGI development, particularly among a small group of informed individuals in the AI field.

- AGI Realism: A perspective that acknowledges the imminent development of AGI, emphasizes its national security implications, advocates for American leadership, and recognizes the need for responsible development to mitigate risks.

- Superalignment: The technical challenge of ensuring that AI systems much smarter than humans remain controllable and aligned with human values.

- Unhobbling: The process of removing limitations or constraints on AI systems to unlock their latent capabilities, often through simple algorithmic improvements.

- The Project: A hypothetical future government-led initiative, similar to the Manhattan Project, to develop AGI under national security oversight.

- Intelligence explosion: A rapid, self-reinforcing cycle of AI improvement where AI systems capable of enhancing themselves lead to exponential growth in capabilities.

- Counting the OOMs: A method of projecting AI progress by tracking order-of-magnitude (OOM) improvements in compute power and algorithmic efficiency.

- Test-time compute overhang: The potential for AI systems to use significantly more computational resources at inference time than they currently do, for example by reasoning at much greater length on a single problem, potentially leading to dramatic capability increases.
