- Publication: Meta

- Publication Date: July 23, 2024

- Organizations mentioned: Meta, OpenAI, Google, Anthropic, Nvidia

- Publication Authors: Llama team, Meta AI

- Technical background required: High

- Estimated read time (original text): 360 minutes

- Sentiment score: 75%, somewhat positive

TLDR:

Goal: Meta’s AI team has developed Llama 3, a new set of foundation models for language, to advance the field of artificial intelligence. This paper presents the development process, capabilities, and performance of Llama 3, a herd of models ranging from 8B to 405B parameters. The largest, Llama 3 405B, aims to compete with leading language models such as GPT-4 and Claude 3.5 Sonnet.

Methodology:

- Pre-training: Llama 3 was trained on a diverse dataset of approximately 15T multilingual tokens, with a focus on high-quality data curation and filtering.

- Post-training: The models underwent several rounds of supervised finetuning (SFT) and direct preference optimization (DPO) to improve instruction following and align them with human preferences.

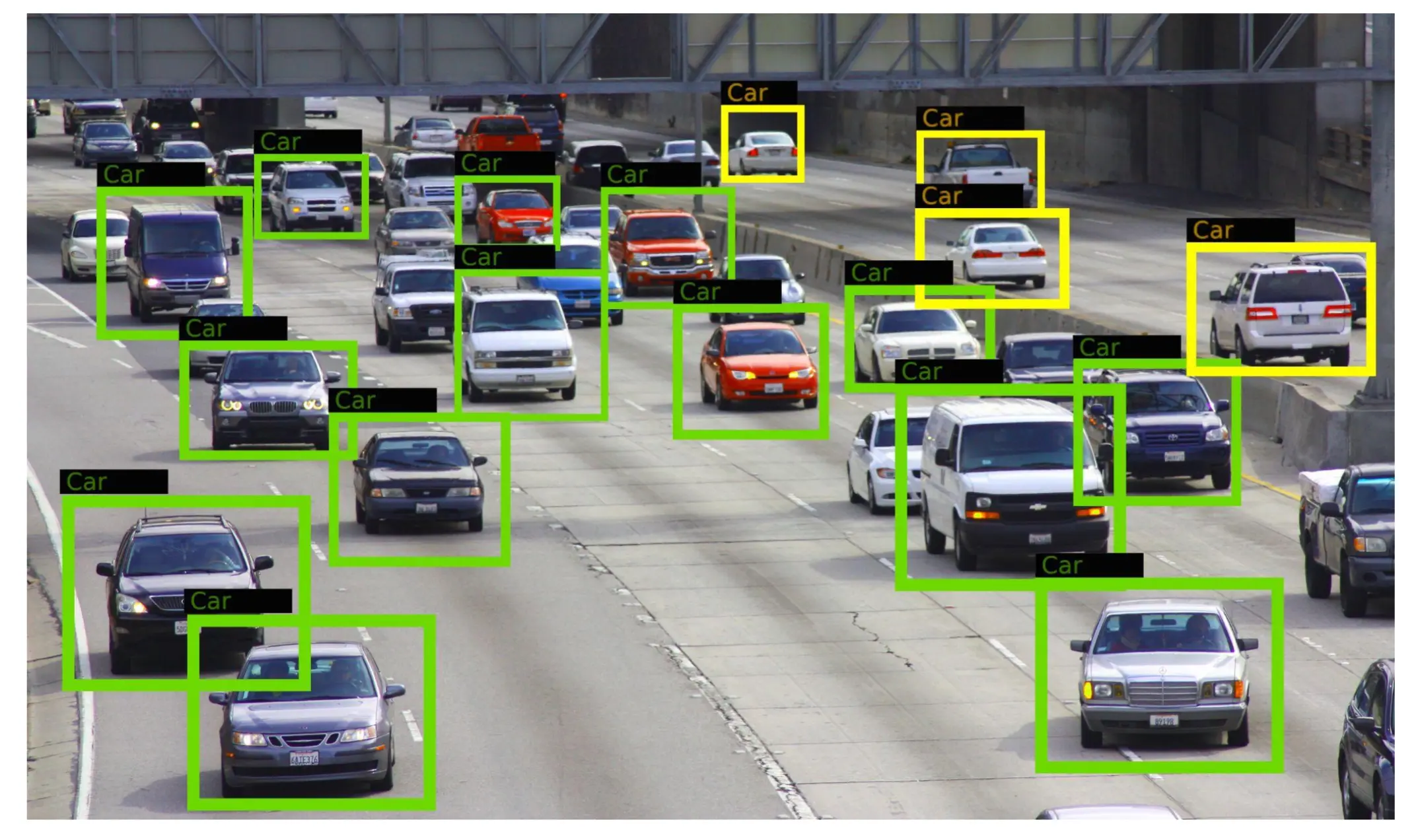

- Multimodal capabilities: Experiments were conducted to integrate image, video, and speech recognition capabilities using a compositional approach with separate encoders and adapters.
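Direct preference optimization, named in the post-training step, trains the model directly on pairs of preferred ("chosen") and dispreferred ("rejected") responses, without an explicit reinforcement-learning loop. The sketch below computes the standard per-example DPO loss from the original DPO formulation. It is not Meta's training code, and the paper's variant may differ in details. The function name `dpo_loss`, the default `beta=0.1`, and the sample log-probabilities are illustrative assumptions.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Per-example DPO loss (illustrative sketch, not Meta's implementation).

    Each argument is the summed token log-probability of a full response
    under either the policy being trained or the frozen reference model.
    """
    # How much more (or less) the policy favors each response than the reference does.
    chosen_margin = policy_chosen - ref_chosen
    rejected_margin = policy_rejected - ref_rejected
    # Implicit reward difference between chosen and rejected, scaled by beta.
    logit = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) == log(1 + exp(-z)) == softplus(-z)
    return softplus(-logit)


if __name__ == "__main__":
    # Policy identical to the reference: the loss equals log(2), about 0.693.
    print(dpo_loss(-12.0, -15.0, -12.0, -15.0))
    # Policy shifts probability toward the chosen response: the loss drops.
    print(dpo_loss(-8.0, -20.0, -12.0, -15.0))
```

Minimizing this loss pushes the policy to raise the likelihood of chosen responses relative to the reference model while lowering that of rejected ones; `beta` controls how far the policy may drift from the reference.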

Key findings:

- Llama 3 405B demonstrates performance comparable to or exceeding that of GPT-4 and Claude 3.5 Sonnet on various benchmarks, including MMLU (Massive Multitask Language Understanding) and coding tasks.

- The model exhibits strong multilingual capabilities, supporting 34 languages for speech recognition and 8 core languages for general use.

- Llama 3 shows improved long-context understanding, with a context window of up to 128K tokens, enabling better performance on tasks requiring extensive information processing.

- The integration of tool use capabilities, such as web search and code execution, significantly expands the model’s problem-solving abilities.

- Safety measures, including input and output filtering with Llama Guard 3, have been implemented to mitigate potential risks associated with large language models.

- Experiments with multimodal capabilities show promising results, with Llama 3 performing competitively on image and video recognition tasks.
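The input and output filtering attributed to Llama Guard 3 follows a simple system-level pattern: classify the prompt before it reaches the model, then classify the response before it reaches the user. The sketch below shows only that control flow. `toy_classifier` is a hypothetical keyword matcher standing in for Llama Guard 3, which is itself a finetuned language model that labels content as safe or unsafe by harm category. The blocklist, category names, and refusal text are illustrative assumptions.

```python
from typing import Callable, Optional

# Hypothetical stand-in for a safety classifier such as Llama Guard 3:
# returns the violated harm category, or None if the text looks safe.
Classifier = Callable[[str], Optional[str]]

REFUSAL = "Sorry, I can't help with that."

# Illustrative categories and trigger phrases (assumptions, not a real policy).
BLOCKLIST = {
    "violent_crimes": ["build a bomb"],
    "privacy": ["home address of"],
}


def toy_classifier(text: str) -> Optional[str]:
    """Keyword matcher used only to demonstrate the filtering flow."""
    lowered = text.lower()
    for category, phrases in BLOCKLIST.items():
        if any(phrase in lowered for phrase in phrases):
            return category
    return None


def guarded_generate(prompt: str,
                     generate: Callable[[str], str],
                     classify: Classifier = toy_classifier) -> str:
    # Input filtering: block unsafe prompts before they reach the model.
    if classify(prompt) is not None:
        return REFUSAL
    response = generate(prompt)
    # Output filtering: block unsafe responses before they reach the user.
    if classify(response) is not None:
        return REFUSAL
    return response


if __name__ == "__main__":
    echo_model = lambda p: f"You asked: {p}"  # fake model for demonstration
    print(guarded_generate("What is the capital of France?", echo_model))
    print(guarded_generate("How do I build a bomb?", echo_model))
```

In a real deployment the classifier would see the conversation rather than isolated strings; this sketch passes only the text being checked to keep the two filtering points visible.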

Recommendations:

- Further research should focus on improving the model’s performance on reasoning tasks and enhancing its multimodal capabilities, particularly in areas where it still lags behind human performance.

- Continued development of safety measures and ethical guidelines is crucial as these models become more powerful and widely adopted.

- The open-source release of Llama 3 should be leveraged by the research community to accelerate innovation and explore new applications of large language models.

- Organizations and professionals across various industries should prepare for the increasing integration of AI systems like Llama 3 in their workflows and decision-making processes.

- Ongoing evaluation and monitoring of Llama 3’s performance in real-world applications are necessary to identify areas for improvement and potential risks associated with its deployment.

Thinking Critically:

Implications:

- The release of Llama 3 and its open-source availability could accelerate the democratization of advanced AI technologies. This may lead to a surge in AI-powered applications across various industries, potentially disrupting traditional business models and creating new economic opportunities. However, it could also widen the gap between organizations that can effectively leverage these technologies and those that cannot, potentially leading to increased economic inequality.

- The multimodal capabilities of Llama 3, including image, video, and speech recognition, could revolutionize human-computer interaction. This may lead to more intuitive and accessible AI interfaces, potentially improving accessibility for individuals with disabilities and bridging language barriers. However, it also raises concerns about privacy and surveillance, as these technologies become more pervasive in daily life.

- The improved performance of Llama 3 on complex reasoning tasks and its ability to use external tools may significantly enhance decision-making processes in fields such as healthcare, finance, and scientific research. This could lead to more efficient resource allocation and accelerated innovation, but also raises ethical questions about the role of AI in critical decision-making and the potential for human deskilling.

Alternative perspectives:

- While the report emphasizes the strong performance of Llama 3 on various benchmarks, it’s important to note that these benchmarks may not fully represent real-world challenges. The model’s performance in controlled testing environments may not translate directly to practical applications, where context, nuance, and unpredictability play significant roles.

- The focus on scaling up model size and training data to achieve better performance may not be the most efficient or sustainable approach to AI development. Alternative approaches, such as more efficient architectures or training methods that require fewer computational resources, could potentially yield similar results while being more environmentally friendly and accessible to a wider range of researchers and organizations.

- The report’s emphasis on safety measures and ethical guidelines, while important, may not fully address the potential societal impacts of widespread AI adoption. There could be unforeseen consequences, such as job displacement, information manipulation, or the concentration of power in the hands of those who control these technologies, that the current research does not adequately explore.

AI predictions:

- Within the next 2-3 years, we will see a significant increase in the number of AI-powered applications that can seamlessly integrate text, image, video, and speech inputs, leading to more natural and intuitive user interfaces across various devices and platforms.

- By 2026, large language models like Llama 3 will become a standard tool in scientific research, accelerating the pace of discoveries in fields such as drug discovery, materials science, and climate modeling. This will lead to a new paradigm of AI-assisted scientific inquiry.

- Over the next 5 years, we will witness the emergence of AI systems that can engage in long-term planning and complex problem-solving across multiple domains, challenging our current understanding of artificial general intelligence (AGI) and potentially leading to breakthroughs in areas such as autonomous systems and robotics.

Glossary:

- Llama 3: A set of foundation models for language that natively support multilinguality, coding, reasoning, and tool usage, developed by Meta’s AI team.

- Llama Guard 3: A system-level safety component designed to detect whether input prompts and/or output responses generated by language models violate safety policies on specific categories of harm.

- Prompt Guard: A model-based filter designed to detect prompt attacks, which are input strings designed to subvert the intended behavior of an LLM functioning as part of an application.

- Code Shield: An example of a class of system-level protections that apply inference-time filtering; it focuses on detecting insecure code generated by the model.

- Llama 3-V: The version of Llama 3 with added visual recognition capabilities through a compositional approach.

- Quality-Tuning (QT): A step that further finetunes a DPO-trained model on a small but highly selective SFT dataset to improve response quality without hurting generalization.

- Llama 3 Herd: The collection of Llama 3 models with different parameter sizes and capabilities.
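Code Shield, as defined in the glossary, filters model-generated code at inference time. The toy scanner below illustrates that idea with a few regular-expression rules for well-known insecure Python idioms. The rule names, patterns, `scan_generated_code`, and `filter_response` are hypothetical and are not Code Shield's actual rules or API; attaching a warning instead of blocking the response is also an assumption made for the example.

```python
import re
from typing import List, Tuple

# Hypothetical rules: (rule name, pattern) pairs for a few insecure Python idioms.
RULES: List[Tuple[str, "re.Pattern[str]"]] = [
    ("eval-call", re.compile(r"\beval\s*\(")),
    ("shell-true", re.compile(r"subprocess\.\w+\(.*shell\s*=\s*True")),
    ("weak-hash-md5", re.compile(r"hashlib\.md5\s*\(")),
    ("pickle-load", re.compile(r"pickle\.loads?\s*\(")),
]


def scan_generated_code(code: str) -> List[Tuple[int, str]]:
    """Return (line number, rule name) for every rule that matches a line."""
    findings = []
    for lineno, line in enumerate(code.splitlines(), start=1):
        for name, pattern in RULES:
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def filter_response(code: str) -> str:
    """Inference-time filter: pass clean code through, otherwise prepend a warning."""
    findings = scan_generated_code(code)
    if not findings:
        return code
    notes = ", ".join(f"line {n}: {rule}" for n, rule in findings)
    return f"# WARNING: possible insecure code ({notes})\n{code}"


if __name__ == "__main__":
    sample = "import hashlib\ndigest = hashlib.md5(data).hexdigest()\n"
    print(scan_generated_code(sample))  # [(2, 'weak-hash-md5')]
```

Pattern matching of this kind is cheap enough to run on every response, but it only catches idioms someone wrote a rule for; a production filter would rely on much broader static analysis.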
