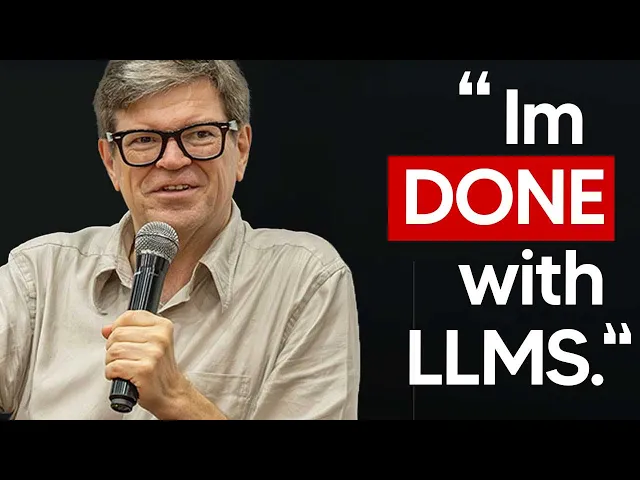

Meta's AI Boss Says He's DONE With LLMs…

Why Meta’s AI boss is ‘done’ with LLMs – and what might replace them

When someone who’s been at the forefront of AI research for decades says they’re “not so interested in LLMs anymore,” it’s worth paying attention. That’s exactly what Yann LeCun, Meta’s Chief AI Scientist and one of the godfathers of modern AI, declared at Nvidia’s GTC 2025 conference recently.

In a tech landscape where large language models (LLMs) like ChatGPT and Claude dominate headlines and investment dollars, LeCun’s statement might seem surprising. But his reasoning offers a fascinating glimpse into where AI might be headed next.

Four areas that matter more than LLMs

According to LeCun, LLMs are now mostly “in the hands of industry product people” who are making incremental improvements with more data and computing power. Instead, he’s focused on four more fundamental challenges:

- Understanding the physical world – How machines can grasp the real, physical environment around them

- Persistent memory – How AI systems can maintain and utilize long-term information

- Reasoning – Moving beyond the “simplistic” reasoning of current LLMs

- Planning – Enabling AI to formulate and execute complex plans

“I’m excited about things that a lot of people in this community might get excited about five years from now,” LeCun explained, suggesting his interests have already moved beyond where most of the industry is focused today.

Why text alone can’t lead to general AI

LeCun highlighted a fundamental limitation of current AI: LLMs are built around predicting discrete tokens (words or word parts), which just isn’t how the physical world works.
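To make the discrete-versus-continuous distinction concrete, here is a minimal sketch in Python. The four-word vocabulary, the scores, and the 16x16 patch size are all made-up assumptions for illustration; this is not how any production LLM is implemented, nor is it LeCun's proposed alternative. It only shows that a finite vocabulary lets a model assign a probability to every candidate, while even a tiny image patch has far too many possible values to enumerate.

```python
import math

def softmax(logits):
    """Turn raw scores into a probability distribution over a finite set."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and scores for the word after "The cat sat on the".
vocab = ["mat", "sofa", "roof", "moon"]
logits = [3.0, 2.0, 1.0, -1.0]

# Discrete case: every candidate token can be listed and given a probability.
probs = softmax(logits)
next_token = vocab[probs.index(max(probs))]
for token, p in zip(vocab, probs):
    print(f"{token}: {p:.3f}")

# Continuous-like case: a 16x16 RGB patch quantised to 8 bits per channel
# already has 256 ** (16 * 16 * 3) possible values, far too many to list.
patch_states = 256 ** (16 * 16 * 3)
digits = len(str(patch_states))
print(f"possible patch values: a number with {digits} digits")
```

The design choice here is deliberately simple: the first half is an ordinary softmax over a list, and the second half is just a count, so the contrast comes from the size of the outcome space rather than from any particular model architecture.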

While token prediction works well for text, it falls short when dealing with the continuous, high-dimensional nature of the physical world. As he put it
