Meta Finally Revealed The Truth About LLAMA 4

# Meta’s Llama 4 Release: Behind the Drama and Benchmarks

The recent release of Meta’s Llama 4 language model has been accompanied by controversy and questions about its actual capabilities versus its marketed performance. This blog post examines the situation and provides insights into what might be happening behind the scenes.

## The Missing Technical Paper

One of the first red flags was that Meta released Llama 4 without a technical paper, an unusual move for a major AI model release. Without visibility into the model’s architecture and training methods, some critics suspect Meta may have overfitted the model so that it performs well on benchmark tests but not in real-world applications.
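To make the overfitting concern concrete: one common, simple heuristic for spotting benchmark contamination is to measure how many word n-grams from a benchmark question also appear verbatim in the training text. The sketch below is only an illustration of that idea. The function names (`ngrams`, `overlap_fraction`) and the default window size are assumptions made for this example, and nothing here reflects how Meta or any benchmark maintainer actually checks for contamination. It also requires access to the training text, which is exactly what outsiders lack when no technical paper or data description is published.

```python
def ngrams(text, n=8):
    """Return the set of lowercase word n-grams in `text`."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_fraction(benchmark_item, corpus_text, n=8):
    """Fraction of the benchmark item's n-grams that also occur in the corpus.

    A value near 1.0 suggests the item appears almost verbatim in the corpus;
    a value near 0.0 suggests no verbatim overlap at this window size.
    """
    item_ngrams = ngrams(benchmark_item, n)
    if not item_ngrams:
        # The item is shorter than n words, so there is nothing to compare.
        return 0.0
    return len(item_ngrams & ngrams(corpus_text, n)) / len(item_ngrams)


# Toy strings for illustration only, not real benchmark or training data.
question = "what is the capital of france"
corpus = "trivia dump: what is the capital of france answer paris"
print(overlap_fraction(question, corpus, n=3))
```

A high overlap score would not prove deliberate training on test sets, and a low score would not rule out paraphrased leakage. It is a first-pass signal at best.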

## Internal Turmoil at Meta?

An anonymous post from someone claiming to be inside Meta suggested their AI team was in “panic mode” following the release of DeepSeek V3, a model from a relatively unknown Chinese company. According to this source:

– DeepSeek V3 was developed with just a $5.5 million training budget

– Meta’s leadership was concerned about justifying the enormous costs of their AI division

– The compensation for individual AI leaders at Meta often exceeds what it cost to train DeepSeek’s entire model

– The organization may have been artificially inflated with people wanting to join the high-profile AI team

## Benchmark Discrepancies

AI professor Ethan Mollick identified apparent differences between the Llama 4 version used for benchmark testing and the one released to the public. His comparison of answers shows the benchmark version providing much more comprehensive responses than the publicly available model.

The controversy deepened when users noticed discrepancies between the “Llama 4 Maverick Experimental” version (possibly used for benchmarks) and the released “Llama 4 Maverick” model, with the experimental version consistently producing longer, more detailed responses.

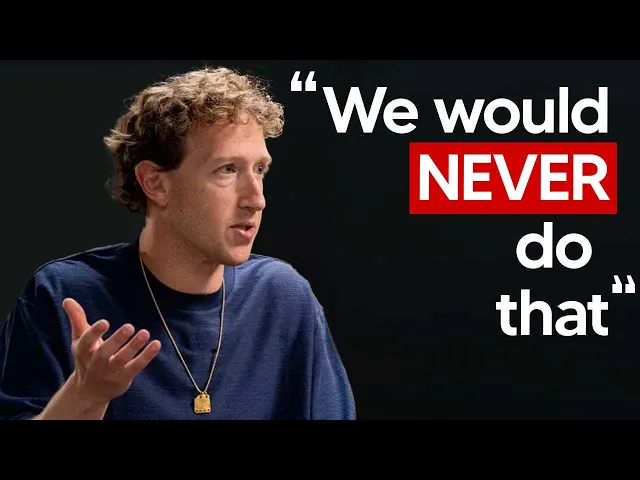
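The "longer, more detailed responses" observation is something readers can check for themselves if they collect answers to the same prompts from both versions. The snippet below is a minimal sketch of such a comparison. The helper names (`length_stats`, `length_ratio`) are made up for this example, and the sample strings are placeholders rather than real model outputs.

```python
from statistics import mean


def length_stats(responses):
    """Return the mean word count and mean character count of the responses."""
    return {
        "mean_words": mean(len(r.split()) for r in responses),
        "mean_chars": mean(len(r) for r in responses),
    }


def length_ratio(experimental, released):
    """Mean word count of `experimental` divided by that of `released`.

    Values above 1 mean the experimental answers are longer on average.
    """
    released_words = length_stats(released)["mean_words"]
    if released_words == 0:
        raise ValueError("released responses contain no words")
    return length_stats(experimental)["mean_words"] / released_words


# Placeholder responses, not real model outputs.
experimental = ["word " * 120, "word " * 80]
released = ["word " * 30, "word " * 20]
print(length_ratio(experimental, released))
```

Length is only a rough proxy. A longer answer is not necessarily a better one, so a ratio like this shows that the two versions behave differently, not which one is more capable.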

## Meta’s Response

Meta has acknowledged the reports of “mixed quality across different services” but attributed this to implementation issues rather than model performance:

– They denied training on test sets: “We simply would never do that”

– They stated that variable quality is due to needing to “stabilize implementations”

– They affirmed belief that Llama 4 represents “a significant advancement”

## Independent Analysis

Artificial Analysis
