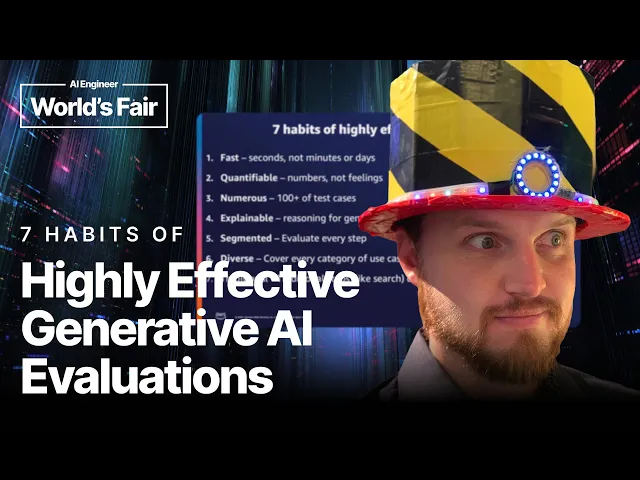

7 Habits of Highly Effective Generative AI Evaluations

Effective AI evaluation habits that matter now

Justin Muller's presentation, "7 Habits of Highly Effective Generative AI Evaluations," offers a timely framework for organizations assessing generative AI tools. As businesses adopt more AI solutions, evaluating these technologies properly becomes essential to making sound investments. Muller's methodical approach cuts through the hype and gives practical guidance to anyone tasked with determining which AI tools will actually deliver business value.

Key Points

- Begin with clear business objectives rather than being distracted by flashy demos or technical specifications; evaluation should always connect back to specific organizational needs

- Design comprehensive test cases that cover a variety of real-world scenarios your business faces, including edge cases that might reveal limitations

- Implement systematic evaluation processes with consistent metrics and scoring frameworks to enable objective comparisons between different AI solutions
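The third point, consistent metrics and a shared scoring framework, can be sketched in a few lines of Python. This is an illustration only, not Muller's method: the criteria names, weights, 0-5 rating scale, tool names, and test-case names below are all hypothetical placeholders that an organization would replace with its own.

```python
from statistics import mean

# Hypothetical criteria and weights (assumed for illustration; they sum to 1.0).
WEIGHTS = {"accuracy": 0.5, "relevance": 0.3, "safety": 0.2}


def case_score(ratings: dict[str, float]) -> float:
    """Weighted score for one test case, given a 0-5 rating per criterion."""
    missing = WEIGHTS.keys() - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    return sum(WEIGHTS[c] * ratings[c] for c in WEIGHTS)


def solution_score(ratings_by_case: dict[str, dict[str, float]]) -> float:
    """Average the weighted case scores across every test case for one tool."""
    return mean(case_score(r) for r in ratings_by_case.values())


def rank(solutions: dict[str, dict[str, dict[str, float]]]) -> list[tuple[str, float]]:
    """Return (tool name, score) pairs sorted from best to worst."""
    scored = [(name, solution_score(cases)) for name, cases in solutions.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up ratings: the same test cases (one routine, one edge case) for each tool.
solutions = {
    "tool_a": {
        "routine_request": {"accuracy": 4, "relevance": 5, "safety": 3},
        "edge_case_ambiguous_input": {"accuracy": 2, "relevance": 3, "safety": 4},
    },
    "tool_b": {
        "routine_request": {"accuracy": 3, "relevance": 4, "safety": 5},
        "edge_case_ambiguous_input": {"accuracy": 4, "relevance": 3, "safety": 4},
    },
}

if __name__ == "__main__":
    for name, score in rank(solutions):
        print(f"{name}: {score:.2f}")
```

Because every tool is rated on the same test cases with the same weights, the resulting scores are directly comparable. Changing the weights is how the comparison would be tied back to a specific business objective.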

The Critical Need for Structured Evaluation

Perhaps the most insightful aspect of Muller's presentation is his emphasis on structured evaluation methodologies. In an industry dominated by marketing hype and technical jargon, his approach grounds the evaluation process in business reality. This matters because organizations are investing heavily in AI technologies, often without a framework for determining whether those investments will yield returns.

Gartner estimates that through 2025, 80% of enterprises will have established formal accountability metrics for their AI initiatives. Yet many organizations still approach AI evaluation in an ad hoc manner, which leads to misaligned expectations and disappointing outcomes. Muller's framework counters the tendency to be swayed by impressive demos or technical specifications that may have little relevance to actual business applications.

Beyond the Presentation: Practical Implementation

What Muller's presentation doesn't fully address is how different industries might need to adapt these evaluation habits. For example, healthcare organizations evaluating generative AI need to place significant emphasis on compliance with regulations like HIPAA and FDA guidelines. Their test cases must extensively verify that patient data remains protected and that AI outputs maintain clinical accuracy. Financial institutions, meanwhile, need to prioritize evaluation of model explainability and audit trails to satisfy regulatory requirements.

A case study worth considering is how Microsoft implemente
