- Publication: Dale on AI

- Publication Date: May 6, 2021

- Organizations mentioned: Google, University of Toronto, OpenAI, HuggingFace, Anthropic

- Publication Authors: Dale Markowitz

- Technical background required: Medium

- Estimated read time (original text): 12 minutes

- Sentiment score: 85%, very positive

The Transformer is a neural network architecture that has reshaped natural language processing and fields beyond it. The article explains how Transformers work, why they train more efficiently than earlier approaches such as RNNs, and how they underpin modern AI applications through models like BERT and GPT-3.

TLDR

Dale Markowitz authored this educational guide to help technical professionals understand Transformers, the revolutionary AI architecture introduced in 2017 that powers modern language models like GPT-3 and BERT. The article serves as an accessible introduction to how Transformers work and why they’ve become fundamental to modern AI applications.

Teaching Approach:

- Builds understanding by comparing Transformers to previous technologies (Recurrent Neural Networks) and breaking down complex concepts into digestible components

- Uses real-world examples and analogies to illustrate technical concepts (like explaining language translation and word context)

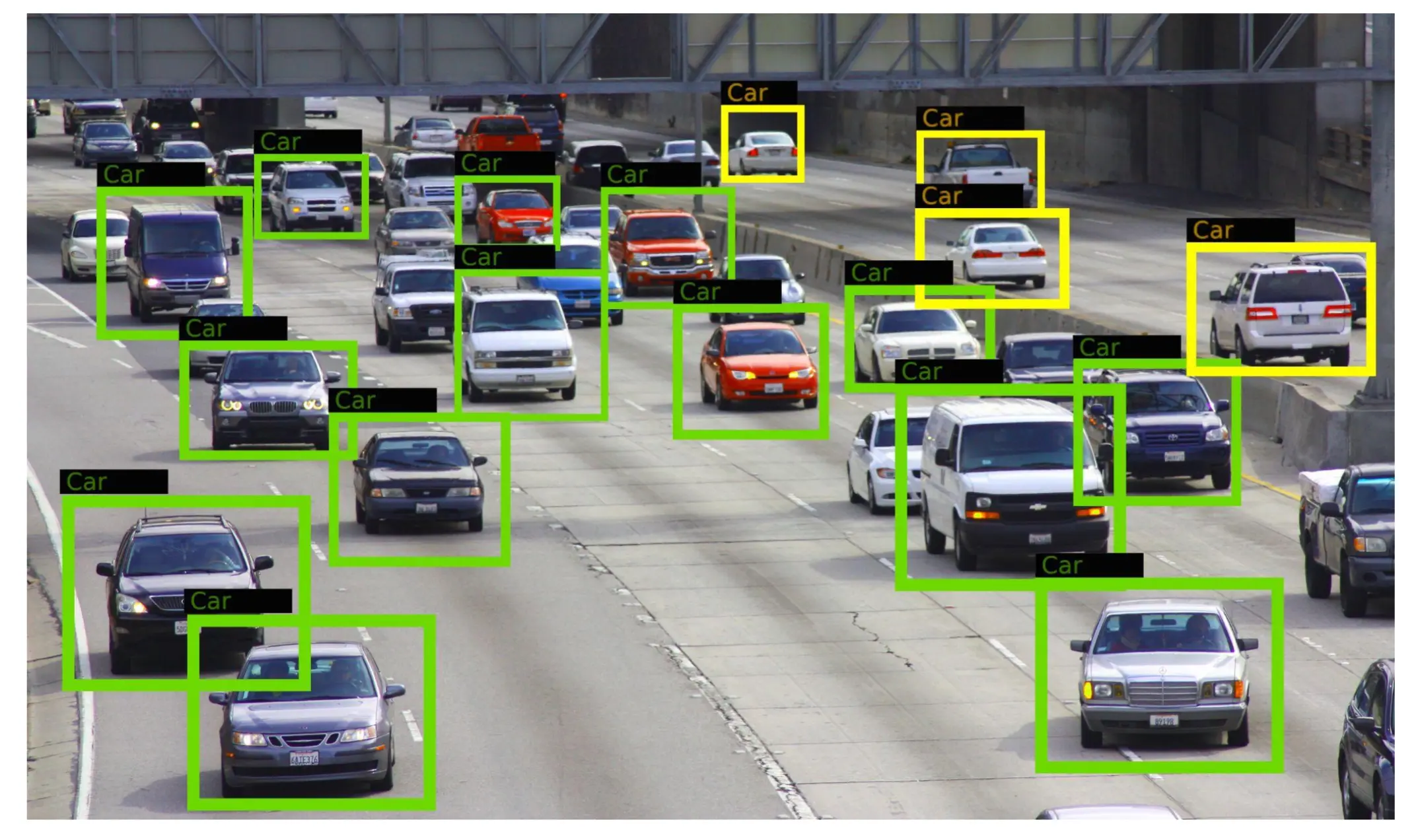

- Provides visual aids and practical examples to reinforce learning concepts

Key Concepts:

- Transformers removed a fundamental limitation of earlier language models such as RNNs, which had to process text sequentially, one word at a time. Transformers process the input in parallel, which allows much faster training and makes it practical to train on massive datasets across multiple processors.

- The architecture introduces three key innovations:

  - Positional encodings (a clever way to help AI understand word order)

  - Attention (allowing AI to focus on relevant words, similar to how humans process language)

  - Self-attention (helping AI understand words in context, like distinguishing between “server” as a waiter versus a computer)

- The technology’s impact extends beyond just language processing:

  - Powers versatile tools like BERT for tasks including summarization and classification

  - Enables new applications in music composition and protein structure prediction

  - Demonstrates consistent improvements with scale (larger models + more data = better results)

- Major implementations like GPT-3 (trained on 45TB of text) showcase the architecture’s potential when trained on massive datasets.
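The positional-encoding and self-attention ideas listed above can be made concrete with a small pure-Python sketch. This is not code from the article. It assumes the sinusoidal encoding from the original 2017 Transformer paper and, as a simplification, uses the input vectors directly as queries, keys, and values instead of learned projection matrices. The function names and the toy embeddings are illustrative only.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal position vectors: even dimensions use sin, odd use cos."""
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each sin/cos pair of dimensions shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention over a list of vectors.

    Assumption: queries, keys, and values are the inputs themselves.
    A real Transformer layer applies learned projections first.
    """
    d = len(x[0])
    outputs, all_weights = [], []
    for q in x:
        # Score this token against every token in the sequence.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        all_weights.append(weights)
        # Each output is a weighted average of all value vectors.
        outputs.append(
            [sum(w * v[c] for w, v in zip(weights, x)) for c in range(d)]
        )
    return outputs, all_weights

# Toy embeddings (made up): tokens 0 and 2 are the same word.
embeddings = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
]
pe = positional_encoding(len(embeddings), len(embeddings[0]))
with_positions = [
    [e + p for e, p in zip(e_row, p_row)]
    for e_row, p_row in zip(embeddings, pe)
]

out_no_pos, _ = self_attention(embeddings)
out_pos, weights_pos = self_attention(with_positions)
```

Without position information, the two identical tokens receive identical outputs, because attention by itself ignores word order. Adding the positional encodings makes their outputs differ. Every output row is computed independently of the others, which is what lets the computation run in parallel.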

Practical Applications:

- Developers can access pre-built Transformer models through platforms like TensorFlow Hub or HuggingFace, making implementation accessible

- The technology has proven particularly valuable for:

  - Text summarization

  - Question answering

  - Classification tasks

  - Named entity resolution

  - Language translation

- Organizations can apply Transformer-based solutions to various business problems, particularly those involving language processing or generation

- The architecture’s versatility makes it valuable across multiple domains, from scientific research to creative applications

- Understanding Transformers is becoming increasingly important for technical professionals as these models continue to drive innovations in AI
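As a rough illustration of the pre-built model access mentioned above, the sketch below wraps the Hugging Face `transformers` summarization pipeline. It assumes the `transformers` package and a backend such as PyTorch are installed, and that the library's default summarization model can be downloaded on first use. The `summarize` helper and the `fake_summarizer` stand-in are hypothetical names introduced here, not part of the article or the library.

```python
def summarize(text, summarizer=None, max_length=60, min_length=10):
    """Return a summary of `text` using a Transformer summarization model.

    If no summarizer is supplied, one is built lazily with the Hugging Face
    `pipeline` helper, which downloads a default pre-trained model.
    """
    if summarizer is None:
        # Imported here so the module loads without the package installed.
        from transformers import pipeline
        summarizer = pipeline("summarization")
    result = summarizer(text, max_length=max_length, min_length=min_length)
    # The pipeline returns a list with one dict per input text.
    return result[0]["summary_text"]

def fake_summarizer(text, **kwargs):
    """Hypothetical offline stand-in that mimics the pipeline's output shape."""
    return [{"summary_text": text[:10]}]

# Offline demonstration using the stand-in instead of a real model.
demo = summarize(
    "Transformers process text in parallel.", summarizer=fake_summarizer
)
```

Calling `summarize(article_text)` with no `summarizer` argument would use the real pre-trained model. Passing the summarizer in as a parameter keeps the helper easy to test without network access.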

Thinking Critically

Implications:

- The democratization of powerful language AI through tools like HuggingFace and TensorFlow Hub could lead to a proliferation of AI-powered applications across industries, potentially transforming how businesses handle text processing, customer service, and content creation.

- As Transformer models continue to demonstrate success across diverse domains (from protein folding to music composition), we may see an acceleration in scientific discoveries and creative applications, fundamentally changing how humans approach complex problems.

- The increasing importance of understanding Transformer architecture could create a new divide between organizations and professionals who grasp these concepts and those who don’t, similar to how computer literacy became a crucial skill in the 1980s.

Alternative Perspectives:

- While the article presents Transformers as a universal solution, their heavy computational requirements and need for massive datasets might make them impractical for many real-world applications, especially for smaller organizations or specific use cases where simpler solutions might suffice.

- The focus on scaling (bigger models, more data) as the path to improvement might be unsustainable, both environmentally due to energy consumption and practically due to the finite nature of high-quality training data.

- The article’s optimistic view of Transformer applications across domains might overlook fundamental limitations in their ability to truly understand context and meaning, rather than just identifying statistical patterns in data.

Future Developments:

- We’ll likely see the emergence of more efficient Transformer architectures that can achieve similar results with smaller models and less training data, making the technology more accessible to smaller organizations.

- The success of Transformers in various domains will likely inspire new hybrid architectures that combine their strengths with other AI approaches, potentially leading to more robust and versatile AI systems.

- As Transformer-based models become more integrated into everyday applications, we’ll see increased focus on making them more interpretable and controllable, rather than just more powerful.

