- Publication: arXiv

- Publication Date: April 13, 2022

- Organizations mentioned: Ludwig Maximilian University of Munich & IWR, Heidelberg University, Runway ML

- Publication Authors: Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, Björn Ommer

- Technical background required: High

- Estimated read time (original text): 30 minutes

- Sentiment score: 61%, somewhat positive (100% being most positive)

Generating high-resolution images with AI has been resource-intensive and largely limited to groups with access to powerful hardware. Before this study, the field relied mainly on generative adversarial networks (GANs) and autoregressive models for image generation. Both are capable, but GANs are prone to training instability and autoregressive models carry heavy compute demands.

To address these challenges, the study introduces Latent Diffusion Models (LDMs), which run the diffusion process in the compressed latent space of a pretrained autoencoder rather than directly on pixels. Working with this compact representation yields detailed images with much less computation. The approach lowers the cost of image synthesis for practical business applications such as marketing and virtual design, and widens access to generative image models.

TLDR

Goal: The research aims to reduce the high computational cost of generating detailed, complex images with diffusion models. By employing Latent Diffusion Models (LDMs), the study seeks to lessen reliance on expensive hardware, making training and inference more accessible and less energy-intensive while maintaining the quality and flexibility of image synthesis. If it holds up in practice, this means high-fidelity image generation with a smaller carbon footprint and lower barriers to entry for organizations of all sizes.

Methodology:

- The authors analyze existing Diffusion Models (DMs) and identify a two-stage learning process: perceptual compression followed by semantic compression.

- They train an autoencoder to create a lower-dimensional latent space that is perceptually equivalent to the original image space, reducing computational complexity.

- Diffusion models are then trained within this latent space, leveraging the inductive biases of the UNet architecture.
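
The methodology above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the helper names, the linear noise schedule, and the example values (a 256×256 image, downsampling factor `f=8`, 4 latent channels) are assumptions chosen for readability. It shows how the latent shrinks with `f`, how a latent is noised at a given timestep, and what the noise-prediction loss compares.

```python
import math
import random


def latent_shape(height, width, f, channels):
    """Latent size for downsampling factor f (assumes f divides H and W)."""
    if height % f or width % f:
        raise ValueError("f must divide both height and width")
    return (height // f, width // f, channels)


def linear_alpha_bar(num_steps, beta_start=1e-4, beta_end=2e-2):
    """Cumulative products of (1 - beta_t) for an assumed linear beta schedule."""
    alpha_bar, running = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / max(num_steps - 1, 1)
        running *= 1.0 - beta
        alpha_bar.append(running)
    return alpha_bar


def noise_latent(z0, alpha_bar_t, rng):
    """Sample z_t = sqrt(a) * z0 + sqrt(1 - a) * eps and return (z_t, eps)."""
    eps = [rng.gauss(0.0, 1.0) for _ in z0]
    signal, noise = math.sqrt(alpha_bar_t), math.sqrt(1.0 - alpha_bar_t)
    zt = [signal * z + noise * e for z, e in zip(z0, eps)]
    return zt, eps


def denoising_loss(eps, eps_pred):
    """Mean squared error between the true noise and a model's prediction."""
    return sum((a - b) ** 2 for a, b in zip(eps, eps_pred)) / len(eps)


# Illustrative values only (assumptions, not results from the paper).
h, w, c = latent_shape(256, 256, f=8, channels=4)
print((h, w, c))               # (32, 32, 4)
print((256 * 256) // (h * w))  # 64 times fewer spatial positions than pixels

rng = random.Random(0)
z0 = [rng.gauss(0.0, 1.0) for _ in range(h * w * c)]  # stand-in for an encoded image
alpha_bar = linear_alpha_bar(1000)
zt, eps = noise_latent(z0, alpha_bar[500], rng)
print(denoising_loss(eps, eps))  # 0.0 for a perfect noise prediction
```

In a real system the random stand-in latent would come from the trained encoder, and a UNet would produce the noise prediction that the loss scores.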

Key findings:

- Latent Diffusion Models (LDMs) achieve state-of-the-art results in image inpainting and class-conditional image synthesis with significantly reduced computational costs.

- LDMs are highly competitive on various other tasks, including text-to-image synthesis, unconditional image generation, and super-resolution, while requiring significantly less computation than pixel-based DMs.

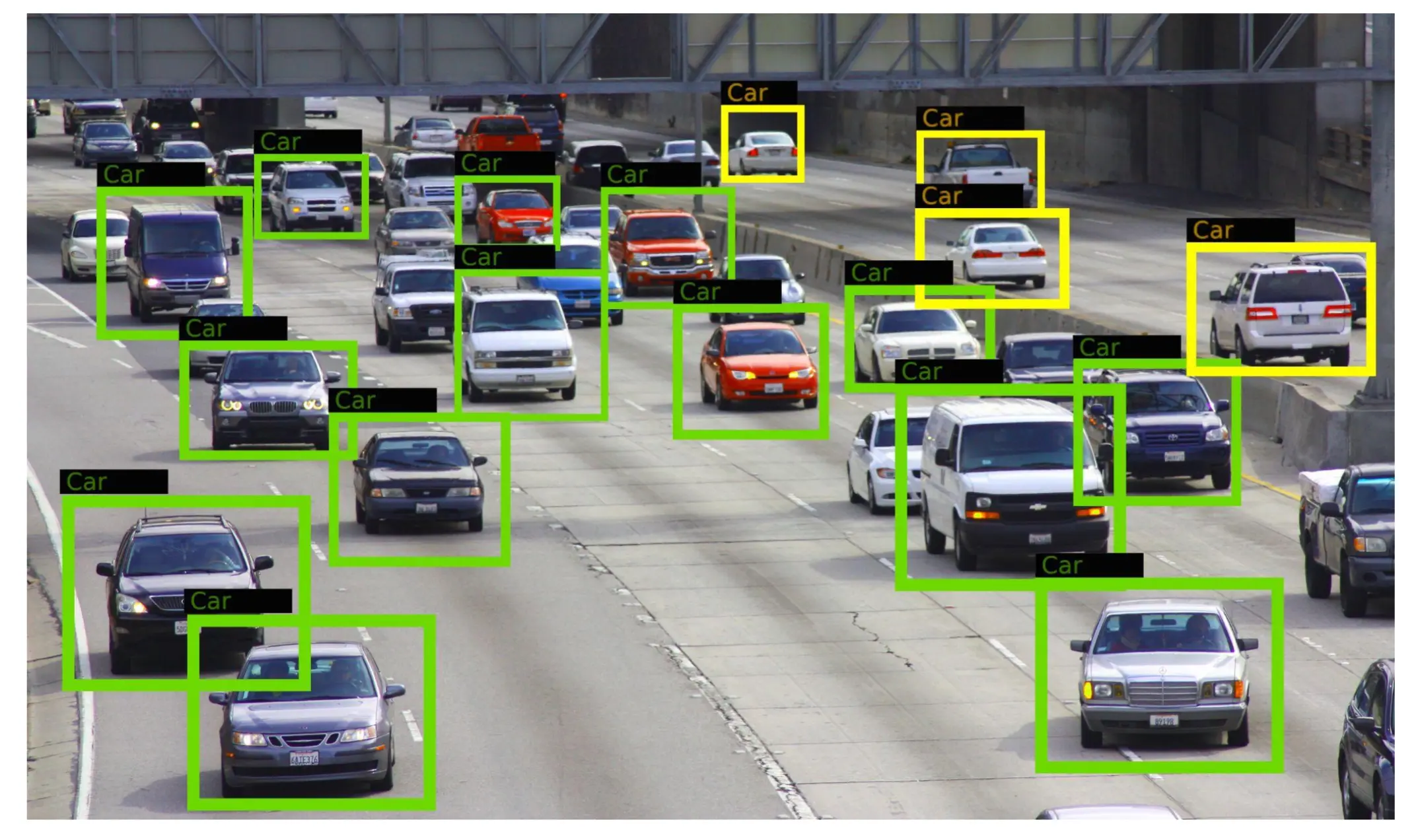

- Cross-attention layers in the LDM architecture allow for flexible conditioning inputs like text or bounding boxes, enabling high-resolution synthesis in a convolutional manner.

- LDMs trained in VQ-regularized latent spaces sometimes produce better sample quality than those trained in continuous spaces.

- The models can generate consistent images up to ~1024² pixels and can be applied to densely conditioned tasks in a convolutional fashion.
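
The cross-attention conditioning mentioned in the findings can be illustrated with a small, dependency-free sketch. It assumes the standard scaled dot-product form, with queries taken from latent features and keys and values taken from conditioning tokens (for example, embedded text). The function names and the toy identity projection matrices are hypothetical and are not taken from the authors' code.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply an (n x p) matrix by a (p x d) matrix, both as lists of lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def cross_attention(latent_feats, cond_tokens, w_q, w_k, w_v):
    """Each latent position attends over all conditioning tokens."""
    q = matmul(latent_feats, w_q)  # (N, d) queries from the latent
    k = matmul(cond_tokens, w_k)   # (M, d) keys from the conditioning
    v = matmul(cond_tokens, w_v)   # (M, d) values from the conditioning
    d = len(q[0])
    scores = [
        [sum(qi * ki for qi, ki in zip(q_row, k_row)) / math.sqrt(d) for k_row in k]
        for q_row in q
    ]
    weights = [softmax(row) for row in scores]  # each row sums to 1
    return matmul(weights, v), weights


# Toy inputs: 2 latent positions, 3 conditioning tokens, feature width 2.
identity = [[1.0, 0.0], [0.0, 1.0]]
latent_feats = [[1.0, 0.0], [0.0, 1.0]]
cond_tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = cross_attention(latent_feats, cond_tokens, identity, identity, identity)
print(len(out), len(out[0]))  # 2 2
```

Because only the keys and values depend on the conditioning input, the same mechanism can accept text, class labels, or layout tokens, provided an encoder maps them to token vectors.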

Recommendations:

- Utilize LDMs for efficient high-resolution image synthesis, especially when computational resources are limited.

- Apply cross-attention based conditioning to enable multi-modal training and control over the image generation process.

- Consider using LDMs for tasks that benefit from the mode-covering behavior of likelihood-based models, such as diverse image synthesis without mode collapse.

- Train the universal autoencoding stage once and reuse it for multiple DM trainings or different tasks to further reduce computational demands.

- Explore the potential of LDMs in various domains beyond image synthesis, such as audio or complex structured data, leveraging their general-purpose nature.
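
The recommendation to train the autoencoding stage once and reuse it can be pictured as encoding each image a single time and sharing the resulting latents across several diffusion trainings. The sketch below is a toy under stated assumptions: `toy_encode` is plain average pooling standing in for a learned encoder, and `LatentCache` is a hypothetical helper, not part of any released codebase.

```python
def toy_encode(image, f):
    """Stand-in encoder: average-pool f x f blocks (NOT a learned autoencoder)."""
    h, w = len(image), len(image[0])
    return [
        [
            sum(image[i + di][j + dj] for di in range(f) for dj in range(f)) / (f * f)
            for j in range(0, w, f)
        ]
        for i in range(0, h, f)
    ]


class LatentCache:
    """Encodes each image once and serves the stored latent afterwards."""

    def __init__(self, encode_fn, f):
        self.encode_fn = encode_fn
        self.f = f
        self.store = {}
        self.encode_calls = 0

    def get(self, key, image):
        if key not in self.store:
            self.store[key] = self.encode_fn(image, self.f)
            self.encode_calls += 1
        return self.store[key]


# Two toy 4x4 "images" shared by two hypothetical downstream trainings.
dataset = {
    "a": [[1, 1, 3, 3], [1, 1, 3, 3], [5, 5, 7, 7], [5, 5, 7, 7]],
    "b": [[0, 0, 2, 2], [0, 0, 2, 2], [4, 4, 6, 6], [4, 4, 6, 6]],
}
cache = LatentCache(toy_encode, f=2)
for task in ("inpainting", "super-resolution"):
    for key, image in dataset.items():
        latent = cache.get(key, image)  # a real pipeline would feed this to a DM training step
print(cache.encode_calls)  # 2: each image was encoded once, not once per task
```

The saving grows with the number of tasks, since the encoder's cost is paid once per image rather than once per image per training run.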

Thinking Critically

Implications:

- Adoption of LDMs could significantly reduce computational resource requirements for high-resolution image synthesis, democratizing access to state-of-the-art generative models for a wider range of researchers and practitioners. This could lead to an acceleration of innovation and creative applications in fields such as graphic design, entertainment, and data augmentation for machine learning.

- The ability of LDMs to perform various tasks including text-to-image synthesis, super-resolution, and inpainting with competitive performance could encourage a shift away from task-specific models to more versatile and efficient general-purpose models. This could streamline workflows and reduce the need for specialized hardware, potentially lowering barriers to entry for startups and smaller institutions.

- The environmental impact of training large generative models could be mitigated by the widespread adoption of LDMs, as they require significantly fewer GPU days for training. This could contribute to more sustainable practices in the AI industry and align with growing concerns about the carbon footprint of machine learning operations.

Alternative perspectives:

- The findings are based on the assumption that the quality of image synthesis does not significantly degrade with the use of LDMs. However, there may be edge cases or specific applications where the detail preservation of LDMs does not meet the necessary standards, potentially limiting their applicability.

- While LDMs offer reduced computational requirements, it is possible that future advancements in hardware and optimization algorithms could negate this advantage. As such, the long-term impact of LDMs might be less significant if more efficient training methods for pixel-based models are developed.

- The report assumes that LDMs can be a one-size-fits-all solution for various image synthesis tasks. However, there might be scenarios where specialized models outperform LDMs, especially in tasks where fine-grained control over the generation process is crucial.

AI predictions:

- Within the next few years, LDMs or similar models that optimize computational efficiency will become the standard for image synthesis tasks, largely replacing pixel-based models in both academic research and industry applications.

- As LDMs become more accessible, there will be an increase in user-generated content and creative applications, leading to new forms of digital art and expression that leverage text-to-image and other generative capabilities.

- The success of LDMs in image synthesis will inspire the development of analogous models for other data modalities, such as video and 3D models, further expanding the capabilities and applications of generative AI.

Glossary

- Latent Diffusion Models (LDMs): A new class of generative models that enable DM training on limited computational resources while retaining quality and flexibility, by applying diffusion models in the latent space of powerful pretrained autoencoders.

- Perceptual compression: The stage in learning where high-frequency details are removed but little semantic variation is learned.

- Semantic compression: The stage in learning where the actual generative model learns the semantic and conceptual composition of the data.

- Cross-attention layers: Layers introduced into the model architecture to turn diffusion models into powerful and flexible generators for general conditioning inputs such as text or bounding boxes.

- Class-conditional image synthesis: A task where models generate images conditioned on class labels.

- Text-to-image synthesis: A task where models generate images based on textual descriptions.
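
For readers who want the glossary terms in symbols, the training objective and the cross-attention operation are usually written as follows. The notation is a reconstruction following the conventions of the original paper and should be checked against it: $\mathcal{E}$ is the pretrained encoder, $z_t$ the noised latent at timestep $t$, $\epsilon_\theta$ the denoising UNet, $\tau_\theta$ the encoder for the conditioning input $y$, and $\varphi_i(z_t)$ an intermediate UNet feature map.

$$
L_{LDM} := \mathbb{E}_{\mathcal{E}(x),\, y,\, \epsilon \sim \mathcal{N}(0, 1),\, t}
\left[ \left\lVert \epsilon - \epsilon_\theta\big(z_t, t, \tau_\theta(y)\big) \right\rVert_2^2 \right]
$$

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left( \frac{Q K^\top}{\sqrt{d}} \right) V,
\qquad
Q = W_Q\, \varphi_i(z_t), \quad
K = W_K\, \tau_\theta(y), \quad
V = W_V\, \tau_\theta(y)
$$

Dropping $y$ and $\tau_\theta(y)$ from the first expression gives the unconditional objective.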

