- Publication: Google

- Publication Date: August 2, 2023

- Organizations mentioned: Google Brain, Google Research, University of Toronto

- Publication Authors: Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin

- Technical background required: High

- Estimated read time (original text): 30 minutes

- Sentiment score: 65%

In the rapidly evolving field of Artificial Intelligence (AI), the Transformer model represents a paradigm shift in sequence transduction tasks such as language translation. Prior to its introduction, recurrent neural networks (RNNs)—which process data sequences step-by-step like a conveyor belt—and convolutional neural networks (CNNs)—which are structured like a multi-layered filter system for data—were the go-to frameworks, often coupled with attention mechanisms to enhance performance.

However, these models had limitations, particularly their sequential computation, which prevents the operations within a training example from being parallelized and so limits processing efficiency and speed. The Transformer model sidesteps these constraints by relying solely on attention mechanisms, significantly speeding up training while also improving translation quality. For business professionals, this advancement means faster, more accurate translations, and the potential for real-time multilingual communication.

The study aimed to develop a new neural network architecture that could outperform existing models in quality and efficiency for machine translation tasks. The goal was to create a model that could be trained more quickly and parallelized more effectively, thereby reducing computational costs and time. This was set against the backdrop of existing models that were hampered by sequential computation, which limited their ability to scale with sequence length and required extensive training time.

Methodology

Transformer Architecture Development

- Objective: To design a novel neural network architecture that eliminates the need for recurrent or convolutional layers in favor of an attention-based system.

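- Method: The Transformer model was developed with an encoder-decoder structure, in which the encoder and the decoder are each a stack of six identical layers built from self-attention and point-wise, fully connected feed-forward layers. Because self-attention relates every position of a sequence to every other position in a single step, rather than one position at a time, the computation within a sequence can be parallelized. The key innovation was multi-head attention, which runs several attention operations in parallel (eight in the base model) so that the model can jointly attend to information from different representation subspaces at different positions.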

- Connection to Goal of the Study: This approach directly addressed the goal by providing a more parallelizable architecture. The base model trained in 12 hours and the larger "big" model in 3.5 days on a single machine with eight NVIDIA P100 GPUs, a small fraction of the training cost of the best previous models. It also improved translation quality as measured by BLEU scores, a metric for evaluating machine-translated text against a reference translation.
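The attention computation described above can be sketched in a few lines. The paper defines scaled dot-product attention as softmax(QKᵀ/√d_k)V and builds multi-head attention by running h such operations on separately projected queries, keys, and values, concatenating the results, and applying an output projection. The pure-Python sketch below is a minimal, unbatched illustration under our own assumptions: it has no masking or training, and the helper names (`matmul`, `multi_head_attention`, and so on) are hypothetical rather than taken from the paper's code.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product of an (m x k) and a (k x n) matrix."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    top = max(row)
    exps = [math.exp(s - top) for s in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d_k)) V for one sequence (no masking)."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n_queries x n_keys) similarity scores
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v)  # each output row is a weighted average of value rows


def multi_head_attention(x: Matrix, w_q: List[Matrix], w_k: List[Matrix],
                         w_v: List[Matrix], w_o: Matrix) -> Matrix:
    """Self-attention with one (d_model x d_head) projection per head for queries,
    keys and values; head outputs are concatenated and projected by w_o."""
    heads = [
        scaled_dot_product_attention(matmul(x, wq), matmul(x, wk), matmul(x, wv))
        for wq, wk, wv in zip(w_q, w_k, w_v)
    ]
    # Concatenate the heads position by position: (n x h*d_head).
    concat = [[value for head in heads for value in head[i]] for i in range(len(x))]
    return matmul(concat, w_o)
```

When all keys are identical, the softmax gives every position equal weight, so `scaled_dot_product_attention` returns the plain average of the value rows; when one key matches the query much better than the others, the output is close to that key's value row.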

Training Data and Batching

- Objective: To efficiently train the Transformer model using a large dataset to ensure high-quality machine translation.

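- Method: The model was trained on the WMT 2014 English-German dataset (about 4.5 million sentence pairs), encoded with byte-pair encoding into a shared source-target vocabulary of about 37,000 tokens, and on the much larger WMT 2014 English-French dataset (36 million sentences), split into a 32,000-token word-piece vocabulary. Sentence pairs were batched by approximate sequence length, with each batch containing approximately 25,000 source tokens and 25,000 target tokens.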

- Connection to Goal of the Study: Effective training on a large dataset enabled the Transformer to learn a wide variety of language patterns and nuances, crucial for achieving superior translation quality compared to existing models.
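Batching by approximate sequence length can be sketched as a sort followed by greedy grouping under a token budget. This is a minimal illustration that assumes pre-tokenized sentence pairs and counts unpadded tokens; the function name `batch_by_length` is hypothetical, and the original training code may have bucketed and counted tokens differently.

```python
from typing import List, Tuple

Pair = Tuple[List[str], List[str]]  # (source tokens, target tokens)


def batch_by_length(pairs: List[Pair], max_tokens: int = 25_000) -> List[List[Pair]]:
    """Group sentence pairs of similar length so that each batch holds at most
    about `max_tokens` source tokens and `max_tokens` target tokens."""
    # Sorting by length keeps pairs of similar length together, which reduces padding.
    ordered = sorted(pairs, key=lambda p: (len(p[0]), len(p[1])))
    batches: List[List[Pair]] = []
    current: List[Pair] = []
    src_tokens = tgt_tokens = 0
    for src, tgt in ordered:
        # Close the current batch if this pair would push either side over budget.
        # (A single pair longer than the budget still gets a batch of its own.)
        if current and (src_tokens + len(src) > max_tokens
                        or tgt_tokens + len(tgt) > max_tokens):
            batches.append(current)
            current, src_tokens, tgt_tokens = [], 0, 0
        current.append((src, tgt))
        src_tokens += len(src)
        tgt_tokens += len(tgt)
    if current:
        batches.append(current)
    return batches
```

Grouping similar lengths keeps every batch close to the same token count, so the cost of each training step stays roughly constant no matter how long the individual sentences are.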

Optimizer and Regularization Techniques

- Objective: To fine-tune the Transformer model for optimal performance using advanced optimization and regularization strategies.

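- Method: The model was trained with the Adam optimizer (β1 = 0.9, β2 = 0.98, ε = 10^-9) and a custom learning-rate schedule that increases the rate linearly for the first 4,000 warmup steps and then decreases it in proportion to the inverse square root of the step number. Residual dropout (rate 0.1 for the base model) and label smoothing (ε_ls = 0.1) were employed to prevent overfitting and improve generalization; label smoothing makes the model less certain and hurts perplexity, but it improves accuracy and BLEU score.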

- Connection to Goal of the Study: These techniques ensured that the Transformer model not only learned efficiently but also produced more reliable and generalizable translations, contributing to its state-of-the-art performance.
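The paper's learning-rate schedule is lrate = d_model^-0.5 · min(step^-0.5, step · warmup_steps^-1.5), with d_model = 512 for the base model and warmup_steps = 4000: it rises linearly during warmup and then decays with the inverse square root of the step. The sketch below implements that formula and, alongside it, label smoothing. The paper gives only the smoothing value (ε_ls = 0.1), so the uniform-spreading formulation used here is an assumption (it is the common one), and both function names are hypothetical.

```python
import math
from typing import List


def transformer_lr(step: int, d_model: int = 512, warmup_steps: int = 4000) -> float:
    """lrate = d_model**-0.5 * min(step**-0.5, step * warmup_steps**-1.5)."""
    step = max(step, 1)  # guard against step 0, where step**-0.5 is undefined
    return d_model ** -0.5 * min(step ** -0.5, step * warmup_steps ** -1.5)


def smoothed_targets(true_index: int, vocab_size: int, eps: float = 0.1) -> List[float]:
    """Label smoothing (assumed common formulation): spread eps uniformly over the
    vocabulary and give the remaining 1 - eps to the correct token."""
    dist = [eps / vocab_size] * vocab_size
    dist[true_index] += 1.0 - eps
    return dist
```

The two branches of `min` meet exactly at `step == warmup_steps`, which is where the learning rate peaks; for example, `transformer_lr(2000)` is half of `transformer_lr(4000)`, and `transformer_lr(8000)` is smaller than the peak again.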

Key Findings & Results: The Transformer set new state-of-the-art results on both translation benchmarks. On WMT 2014 English-to-German, the big model reached a BLEU score of 28.4, more than 2 BLEU above the best previously reported results, including ensembles; on WMT 2014 English-to-French it reached 41.8, a new single-model state of the art. Thanks to its greater parallelism, it achieved these scores at a small fraction of the training cost of earlier models. The study also showed that the Transformer generalizes well to other tasks, such as English constituency parsing, demonstrating its versatility and robustness.
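Because these results are reported as BLEU scores, a simplified corpus-level BLEU may help make the metric concrete: clipped n-gram precisions for n = 1 to 4 are combined by a geometric mean and multiplied by a brevity penalty. This sketch assumes one reference per sentence, whitespace-tokenized input, and no smoothing; its scores are not directly comparable to the paper's numbers, which depend on tokenization and the exact evaluation script.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngrams(tokens: Sequence[str], n: int) -> Counter:
    """Count all contiguous n-grams in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(hypotheses: List[List[str]], references: List[List[str]],
                max_n: int = 4) -> float:
    """Corpus-level BLEU on a 0-100 scale (single reference, uniform weights)."""
    matches = [0] * max_n
    totals = [0] * max_n
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, max_n + 1):
            hyp_counts, ref_counts = ngrams(hyp, n), ngrams(ref, n)
            # Clipping: an n-gram is credited at most as often as it occurs in the reference.
            matches[n - 1] += sum(min(c, ref_counts[g]) for g, c in hyp_counts.items())
            totals[n - 1] += max(len(hyp) - n + 1, 0)
    if min(matches) == 0:
        return 0.0  # without smoothing, any zero n-gram precision gives BLEU = 0
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / max_n
    # Brevity penalty: hypotheses shorter than the references are penalized.
    bp = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return 100 * bp * math.exp(log_precision)
```

A hypothesis identical to its reference scores 100; changing a single word lowers every n-gram precision that spans it, so the score drops well below 100 even though most words are still correct.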

Applications:

- Real-time Translation Services: The Transformer can be used to power real-time translation services, providing accurate and instantaneous translations for global communication.

- Content Localization: Media and content creators can utilize the Transformer to localize content quickly, making it accessible to a wider audience in their native languages.

- Customer Support Automation: Businesses can deploy the Transformer in customer support chatbots to offer multilingual assistance, improving customer experience and reach.

- Language Learning Tools: Educational technology can leverage the Transformer to create more effective language learning tools that provide immediate, natural translations.

- Intelligent Assistants: The Transformer can enhance the language understanding capabilities of intelligent assistants, making them more responsive and capable of handling complex, context-rich interactions.

Broader Implications

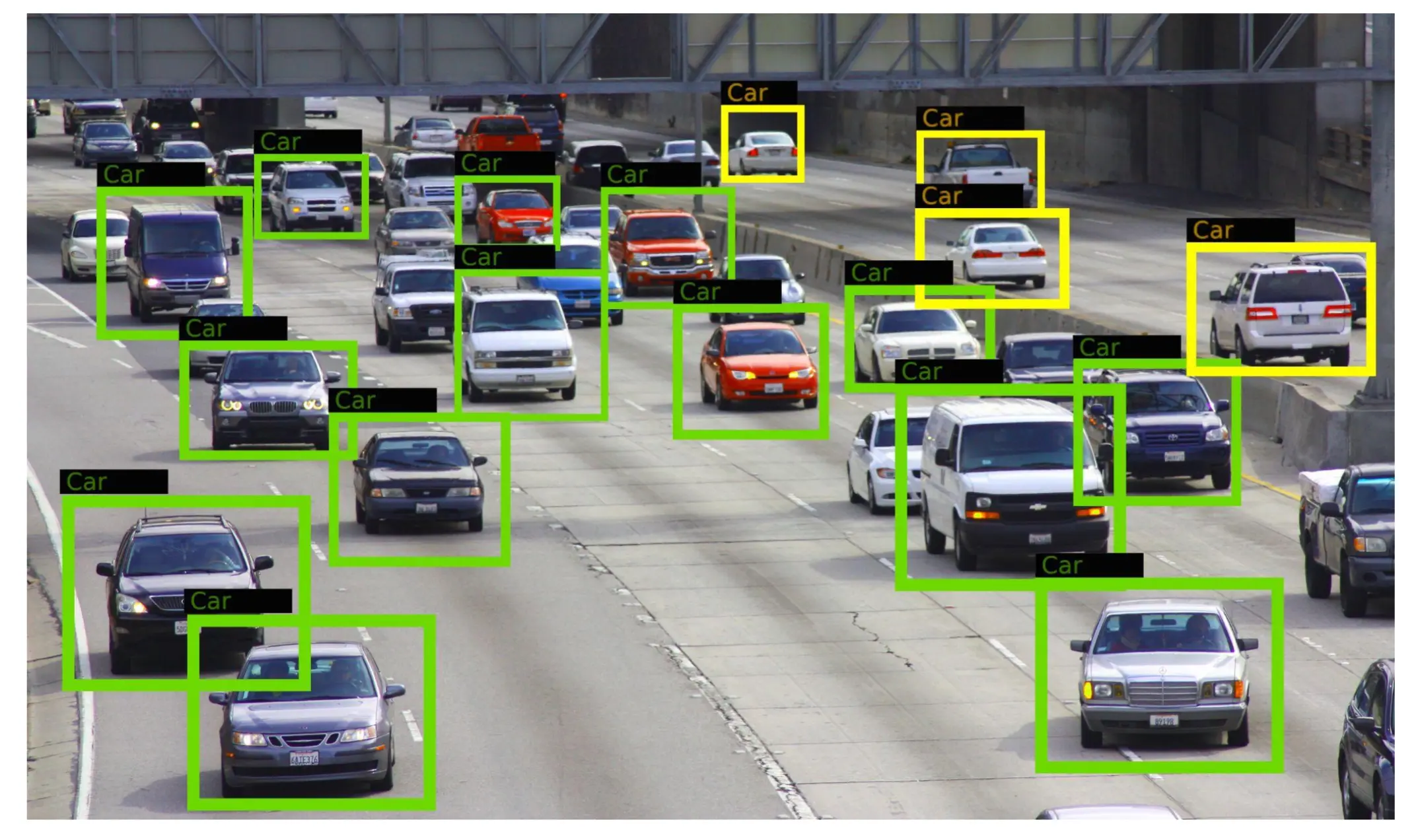

The Transformer model’s ability to process sequences in parallel significantly reduces the training time and computational cost of sequence models, making advanced AI more accessible and sustainable. By setting new benchmarks in translation quality, the Transformer paves the way for seamless and natural cross-cultural communication, potentially fostering greater global collaboration and understanding. Its success may also encourage further research into attention-based architectures, leading to breakthroughs in other AI domains such as image and video processing.

Limitations & Future Research Directions:

- The Transformer’s reliance on attention mechanisms may lead to challenges in interpreting the model’s decision-making processes, necessitating further research into model explainability.

- While the Transformer has shown impressive results in language tasks, its applicability and performance in other domains of AI remain to be fully explored.

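- Future advancements may focus on improving the Transformer’s efficiency and scalability, particularly for tasks involving extremely long sequences or high-dimensional data, because the cost of self-attention grows quadratically with sequence length. The authors themselves propose investigating restricted (local) attention mechanisms to handle large inputs and outputs such as images, audio, and video.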
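The quadratic cost can be illustrated with the rough per-layer complexities the paper compares: O(n²·d) for self-attention versus O(n·d²) for a recurrent layer, where n is the sequence length and d the representation size, with O(1) versus O(n) operations that must run one after another. The helper functions below are hypothetical and count only these dominant terms, not real FLOPs.

```python
def self_attention_ops(n: int, d: int) -> int:
    """Dominant per-layer term for self-attention: n positions each score n positions in d dims."""
    return n * n * d


def recurrent_ops(n: int, d: int) -> int:
    """Dominant per-layer term for a recurrent layer: n steps, each a d x d matrix-vector product."""
    return n * d * d


def sequential_steps(kind: str, n: int) -> int:
    """Operations that cannot be parallelized: constant for self-attention, n for recurrence."""
    return 1 if kind == "self-attention" else n


if __name__ == "__main__":
    d = 512  # d_model of the base Transformer
    for n in (50, 512, 4096):
        print(n, self_attention_ops(n, d), recurrent_ops(n, d))
    # 50 1280000 13107200
    # 512 134217728 134217728
    # 4096 8589934592 1073741824
```

With d = 512, self-attention is about ten times cheaper than recurrence for a 50-token sentence, the two costs are equal at n = 512, and self-attention becomes eight times more expensive at n = 4,096. That is why very long sequences remain a challenge even though every position can still be processed in parallel.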

Glossary

- Recurrent Neural Networks (RNNs): A type of neural network where connections between nodes form a directed graph along a temporal sequence, allowing it to exhibit temporal dynamic behavior and use its internal state (memory) to process sequences of inputs.

- Convolutional Neural Networks (CNNs): A class of deep neural networks, most commonly applied to analyzing visual imagery, that use a mathematical operation called convolution over the input data.

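- BLEU Score: A method for evaluating the quality of machine-translated text by measuring how many of its word sequences (n-grams) also appear in one or more human reference translations; higher scores indicate closer agreement with the references.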

- Attention Mechanism: A process in AI that allows the model to focus on specific parts of the input data, similar to how humans pay more attention to certain aspects of what they see or hear.

- Parallel Processing: A method of computation where many calculations or processes are carried out simultaneously, much like a team working on different tasks at the same time.

- Optimizer: An algorithm, such as Adam, that updates a model's parameters during training to reduce its error on the training data.

- Regularization: A technique in machine learning that prevents the model from overfitting, which occurs when a model learns the training data too well and performs poorly on new data.

Join hosts Anthony, Shane, and Francesca for essential insights on AI's impact on jobs, careers, and business. Stay ahead of the curve – listen now!
