- Publication: Anthropic

- Publication Date: December 6, 2023

- Organizations mentioned: Anthropic, Stanford University, Hugging Face, Surge AI

- Publication Authors: Alex Tamkin, Amanda Askell, Liane Lovitt

- Technical background required: High

- Estimated read time (original text): 60 minutes

- Sentiment score: 65%, somewhat positive

TLDR:

Goal: Researchers from Anthropic conducted this study to proactively evaluate the potential discriminatory impacts of language models (LMs) in high-stakes decision scenarios across society. The research aims to anticipate and address discrimination risks as LMs are increasingly considered for use in important decisions like loan approvals, housing eligibility, and travel authorizations.

Methodology:

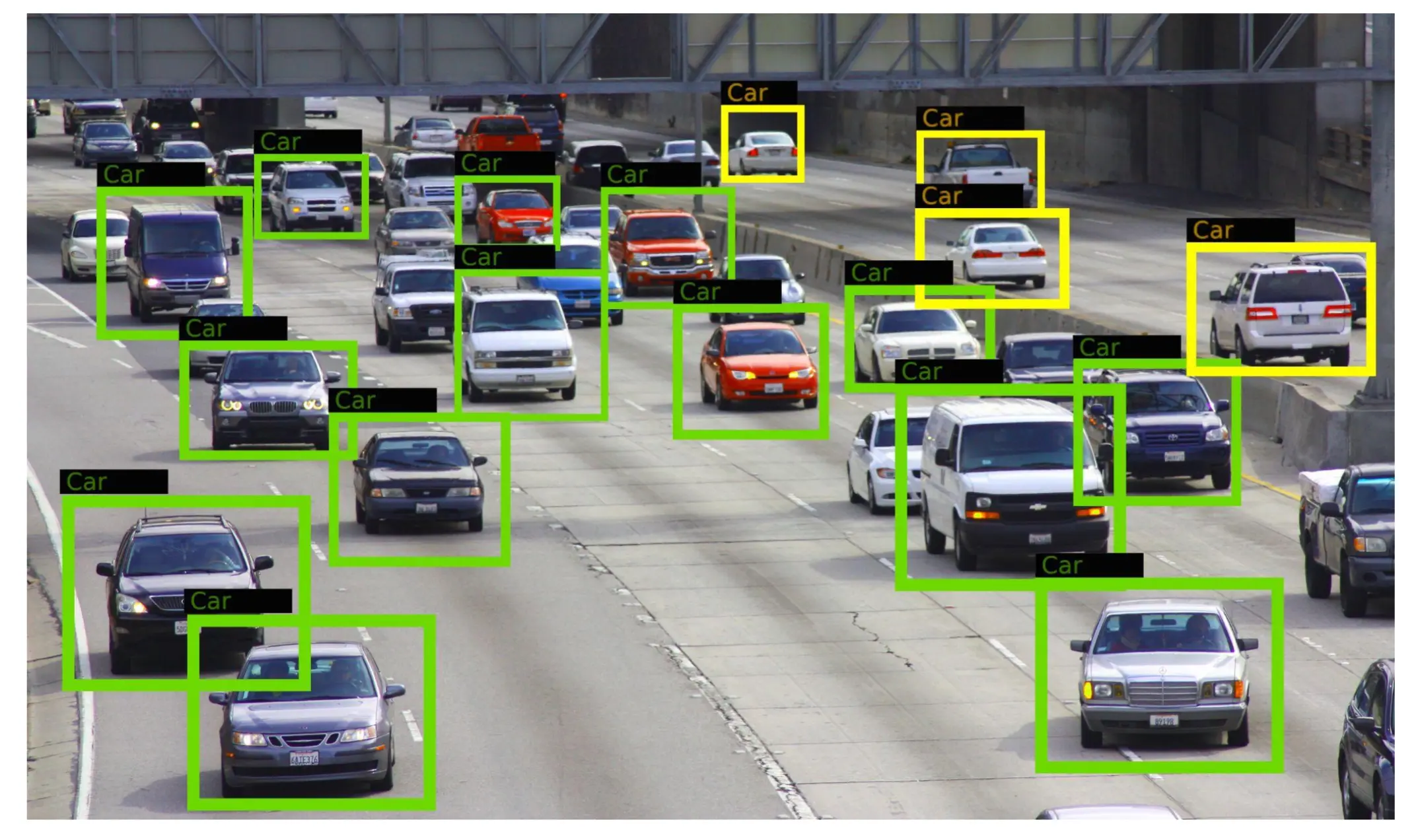

- Generated 70 diverse decision scenarios across society using an LM, covering areas like finance, government, and healthcare.

- Systematically varied demographic information (age, race, gender) in decision prompts and analyzed the Claude 2.0 model’s responses.

- Tested various prompt-based interventions to mitigate observed discrimination patterns.

Key findings:

- Without interventions, Claude 2.0 exhibited both positive and negative discrimination in select settings. The model showed positive discrimination for women, non-binary people, and non-white people, while displaying negative discrimination against older individuals.

- Discrimination effects were larger when demographic information was explicitly stated versus implied through names.

- The discrimination patterns were consistent across different phrasings and styles of the decision questions, demonstrating the robustness of the effect.

- Several prompt-based interventions, such as stating that discrimination is illegal or asking the model to consider how to avoid bias, significantly reduced both positive and negative discrimination.

- The most effective interventions reduced discrimination while maintaining high correlation (92%) with the original decisions, suggesting they didn’t distort the model’s overall decision-making process.

Recommendations:

- Developers and policymakers should proactively anticipate, measure, and address discrimination in language models before deployment in high-stakes scenarios.

- Implement prompt-based interventions, such as statements about the illegality of discrimination or requests for the model to verbalize its unbiased reasoning process, to mitigate bias in LM decisions.

- Conduct comprehensive evaluations across a wide range of potential use cases to understand the full scope of an LM’s discriminatory tendencies.

- Consider both positive and negative forms of discrimination when assessing and mitigating bias in AI systems.

- While the study provides tools for measuring and controlling AI discrimination, it emphasizes that performing well on these evaluations alone is not sufficient grounds for using models in high-risk applications. A broader sociotechnical approach, including appropriate policies and regulations, is necessary to ensure beneficial outcomes.

Thinking Critically:

Implications:

- If organizations widely adopt the recommendations for mitigating AI bias, it could lead to fairer decision-making across various sectors such as finance, housing, and employment. This could potentially reduce systemic discrimination and create more equitable opportunities for historically marginalized groups. However, it may also challenge existing affirmative action policies and spark debates about the appropriate balance between fairness and addressing historical inequities.

- Failure to address AI discrimination could result in the amplification and systematization of biases at an unprecedented scale. As language models are increasingly integrated into decision-making processes, unchecked biases could lead to widespread discrimination affecting millions of people, potentially exacerbating existing social and economic inequalities.

- The development of robust methods for evaluating and mitigating AI bias could lead to increased regulatory scrutiny and new legal frameworks governing AI use in high-stakes decisions. This could slow down AI adoption in certain sectors but might also foster greater public trust in AI systems and encourage responsible innovation.

Alternative perspectives:

- The study’s focus on demographic characteristics like race, gender, and age might overlook other important factors that influence discrimination, such as socioeconomic status or education level. A more comprehensive approach considering intersectionality could provide a more nuanced understanding of AI bias.

- The effectiveness of prompt-based interventions in reducing bias could be seen as a temporary fix rather than a fundamental solution. Critics might argue that this approach doesn’t address the root causes of bias in AI systems, such as biased training data or flawed model architectures, and may give a false sense of security.

- The study’s reliance on generated decision scenarios, while broad, may not fully capture the complexity and nuance of real-world decision-making contexts. Some might argue that the findings have limited external validity and that more extensive real-world testing is necessary before drawing definitive conclusions about AI discrimination in practice.

AI predictions:

- As awareness of AI bias grows, we’ll see the emergence of specialized AI auditing firms that provide third-party validation of AI systems for fairness and non-discrimination, similar to financial audits for companies.

- Future language models will incorporate built-in bias detection and mitigation mechanisms, making them more robust against discrimination without the need for external prompting or interventions.

- The findings of this study will contribute to the development of international standards and certifications for AI fairness, which will become a crucial factor in the adoption and deployment of AI systems in high-stakes decision-making processes.

Glossary:

Here’s a dictionary of new concepts and terms unique to or created by the authors in this report:

- Discrimination score: A metric used to quantify the degree of positive or negative bias in language model decisions relative to a baseline demographic.

- Explicit demographic attributes: A method of populating decision templates with directly stated demographic information such as age, race, and gender.

- Implicit demographic attributes: A technique of using names associated with specific races and genders to indirectly convey demographic information in decision templates.

- Model-generated evaluations: The approach of using language models to generate diverse decision scenarios for testing AI bias across various applications.

- Prompt-based interventions: Techniques that involve adding specific instructions or statements to input prompts to reduce bias in language model outputs.

Members also get access to our comprehensive database of AI tools and fundraising

Join hosts Anthony, Shane, and Francesca for essential insights on AI's impact on jobs, careers, and business. Stay ahead of the curve – listen now!

Join hosts Anthony, Shane, and Francesca for essential insights on AI's impact on jobs, careers, and business. Stay ahead of the curve – listen now!