Artificial intelligence safety experts have conducted the first comprehensive safety evaluation of leading AI companies, revealing significant gaps in risk management and safety measures across the industry.

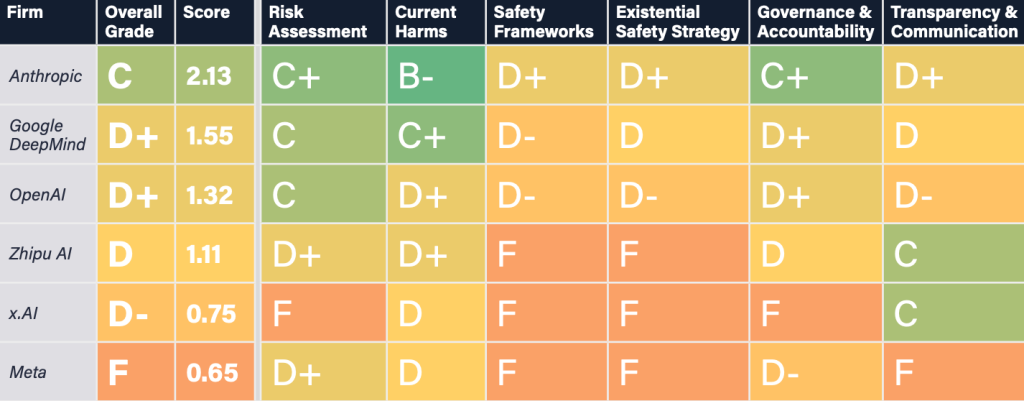

Key findings and scope: The Future of Life Institute’s 2024 AI Safety Index evaluated six major AI companies – Anthropic, Google DeepMind, Meta, OpenAI, x.AI, and Zhipu AI – across multiple safety dimensions.

- The assessment covered six key categories: Risk Assessment, Current Harms, Safety Frameworks, Existential Safety Strategy, Governance & Accountability, and Transparency & Communication

- The evaluation used a standard US GPA grading system, ranging from A+ to F

- Companies were assessed based on publicly available information and their responses to a survey conducted by FLI

Critical concerns identified: The expert panel discovered widespread vulnerabilities and inadequate safety protocols across all evaluated companies.

- All flagship AI models were found to be susceptible to adversarial attacks

- None of the companies demonstrated adequate strategies for maintaining human control over advanced AI systems

- Competitive pressures were identified as a key factor driving companies to bypass crucial safety considerations

Expert perspectives: Leading AI researchers emphasized the gravity of the findings and the importance of safety accountability.

- Stuart Russell, UC Berkeley Professor of Computer Science, highlighted that current safety measures lack quantitative guarantees and may represent a technological dead end

- Yoshua Bengio, Turing Award winner and Mila founder, stressed the importance of such evaluations for promoting accountability and responsible development

- MIT Professor Max Tegmark emphasized the significance of the expert panel’s decades of combined experience in AI risk assessment

Review panel composition: The evaluation was conducted by a diverse group of respected AI experts and thought leaders.

- The panel included prominent figures such as Stuart Russell and Turing Award winner Yoshua Bengio

- Reviewers represented various institutions including UC Berkeley, Université de Montréal, Carnegie Mellon University, and youth AI advocacy organizations

- The panel combined academic expertise with practical experience in AI development and safety

Implications for AI governance: The findings underscore the urgent need for stronger safety measures and accountability in AI development, and they raise questions about whether current technical approaches can ensure AI safety.
