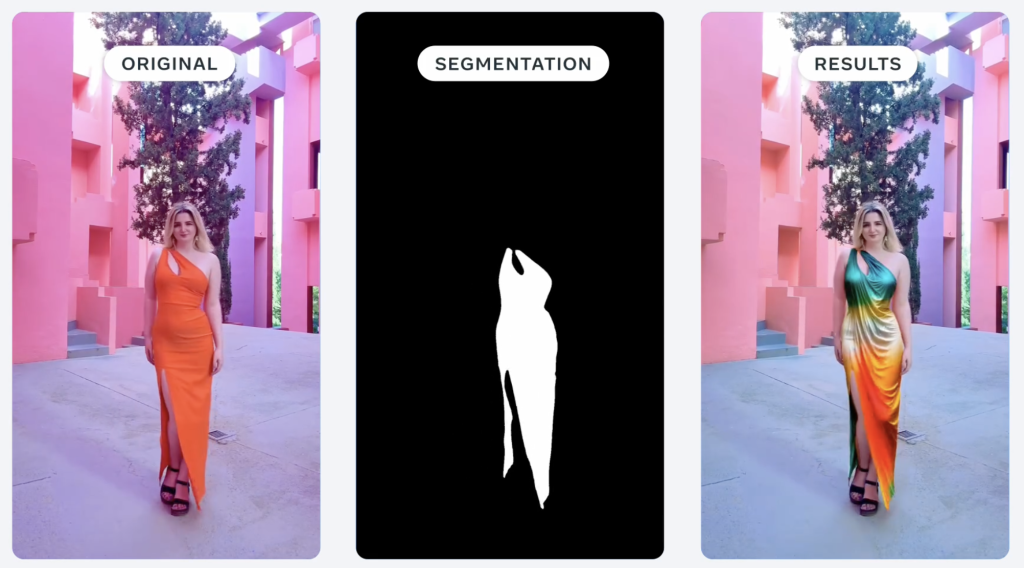

In a recent post on Meta's blog, digital artist Josephine Miller demonstrated how Meta's Segment Anything 2 model is enabling real-time virtual fashion transformations.

The innovation: Using Meta’s Segment Anything 2 (SAM 2) model and other AI tools, London-based XR creative designer Josephine Miller creates videos where clothing appears to change colors and patterns instantly.

- Miller showcased the technology in an Instagram post featuring a gold evening gown that transforms through various designs and colors

- The project aims to demonstrate how digital fashion can reduce reliance on fast fashion while promoting sustainability

- The process combines ComfyUI, an open-source node-based interface for building Stable Diffusion image-generation workflows, with Meta's SAM 2 for precise object segmentation in videos

Technical implementation: Miller’s workflow requires significant computing power and expertise in multiple AI technologies to achieve seamless virtual clothing transformations.

- She built a custom computer with an RTX 4090 GPU to handle the processing demands

- The workflow integrates ComfyUI's node-based pipelines with SAM 2's segmentation capabilities

- SAM 2 builds on the original SAM, which segmented still images, by extending segmentation to video as well as images
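The post does not describe how the masks are used inside Miller's ComfyUI graph. As a rough illustration of why per-frame segmentation matters, the sketch below assumes binary garment masks for every frame already exist (for example, produced by SAM 2) and simply blends the masked pixels toward a new color, cycling through a palette over the video. It is a hypothetical stand-in: `recolor_frame` and `recolor_video` are illustrative names, frames are assumed to be nested lists of RGB tuples, and plain color blending is far simpler than the diffusion-based restyling her workflow performs.

```python
# Hypothetical sketch: recolor the masked "garment" pixels in each video frame.
# Assumption: a binary mask per frame is already available (e.g. from a
# segmentation model such as SAM 2); frames are nested lists of (R, G, B) tuples.
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]
Mask = List[List[bool]]


def recolor_frame(frame: Frame, mask: Mask, target: Pixel, strength: float = 1.0) -> Frame:
    """Blend masked pixels toward `target`; unmasked pixels are copied unchanged."""
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be in [0, 1]")
    out: Frame = []
    for row, mask_row in zip(frame, mask):
        new_row: List[Pixel] = []
        for pixel, inside in zip(row, mask_row):
            if inside:
                # Linear blend per channel: (1 - s) * original + s * target.
                new_row.append(tuple(
                    round((1 - strength) * c + strength * t)
                    for c, t in zip(pixel, target)
                ))
            else:
                new_row.append(pixel)
        out.append(new_row)
    return out  # a new frame; the input frame is not modified


def recolor_video(frames: List[Frame], masks: List[Mask], palette: List[Pixel]) -> List[Frame]:
    """Assign one palette color per frame, cycling through the palette."""
    return [
        recolor_frame(frame, mask, palette[i % len(palette)])
        for i, (frame, mask) in enumerate(zip(frames, masks))
    ]
```

Because the mask confines every change to the garment, the background stays stable from frame to frame; the quality of the effect therefore depends heavily on how precise and temporally consistent the masks are.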

Evolution of the technology: Meta’s SAM technology has significantly improved the efficiency and accuracy of object segmentation in digital content.

- Prior to SAM’s 2023 release, object segmentation was time-consuming and often imprecise

- The technology now enables both interactive and automatic object segmentation

- Miller reports that SAM has dramatically improved her output quality and workflow efficiency
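To make the difference between interactive and automatic segmentation concrete, here is a toy sketch. It assumes a tiny image of integer region labels and uses a breadth-first flood fill as a stand-in for the neural network, so it only illustrates the two prompting patterns, not how SAM actually predicts masks. In interactive mode, a user's click (a single point prompt) selects one region; in automatic mode, the image is prompted with a regular grid of points and duplicate masks are discarded. The function names are hypothetical.

```python
# Toy illustration of point-prompted vs grid-prompted segmentation.
# Assumption: flood fill over region labels stands in for the learned model;
# SAM itself predicts masks with a neural network, not with flood fill.
from collections import deque
from typing import FrozenSet, List, Tuple

Image = List[List[int]]   # each cell holds a region label (e.g. a color id)
Point = Tuple[int, int]   # (row, col)
Region = FrozenSet[Point]


def segment_from_point(image: Image, point: Point) -> Region:
    """Interactive mode: return the 4-connected region containing `point`."""
    rows, cols = len(image), len(image[0])
    value = image[point[0]][point[1]]
    seen = {point}
    queue = deque([point])
    while queue:
        r, c = queue.popleft()
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            in_bounds = 0 <= nr < rows and 0 <= nc < cols
            if in_bounds and (nr, nc) not in seen and image[nr][nc] == value:
                seen.add((nr, nc))
                queue.append((nr, nc))
    return frozenset(seen)


def segment_everything(image: Image, step: int = 2) -> List[Region]:
    """Automatic mode: prompt with a regular grid of points, drop duplicate masks."""
    masks: List[Region] = []
    for r in range(0, len(image), step):
        for c in range(0, len(image[0]), step):
            mask = segment_from_point(image, (r, c))
            if mask not in masks:
                masks.append(mask)
    return masks
```

A coarser `step` prompts fewer points and can miss small regions entirely. Real systems also filter overlapping or low-quality masks, whereas this toy version only drops exact duplicates.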

Creator’s journey: Miller’s expertise in AI-powered creative tools developed through self-directed learning during the COVID-19 pandemic.

- She began experimenting with AI during lockdown in 2020

- Her learning process started with text-to-image generation before advancing to video models

- After two months of experimentation, she developed her current workflow

- Her expertise has led to collaborations with global brands in augmented, virtual, and mixed reality

Future implications: While current hardware requirements limit widespread adoption, the technology shows promise for democratizing creative digital fashion applications.

- The process currently requires high-end hardware like an RTX 4090 GPU

- Miller envisions broader adoption of open source models like SAM for creative applications

- The technology could help reshape how people interact with fashion in digital spaces while promoting sustainable consumption practices

Looking ahead: As hardware capabilities improve and AI tools become more accessible, technologies like SAM 2 may bridge the gap between traditional fashion consumption and digital expression, potentially catalyzing a shift toward more sustainable fashion practices in both virtual and physical spaces.
