AI code is here. We need to be responsible with it.

AI generates broken code and costs thousands

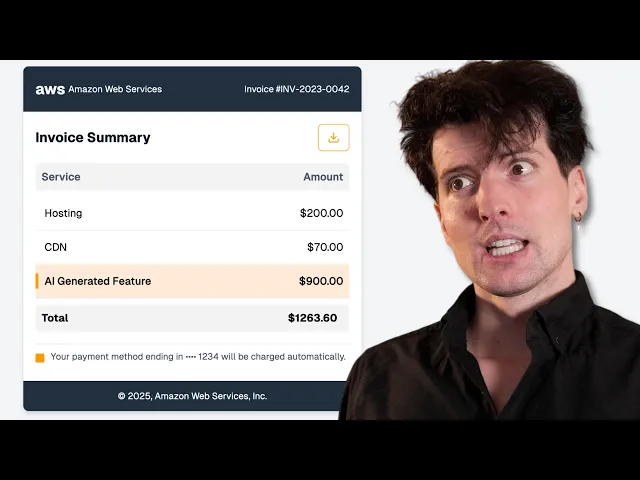

In today's increasingly AI-assisted development world, we face a new kind of challenge: code that looks right but silently creates expensive problems. Last week's viral tweet illustrates the danger. The AI coding agent Devin added a single analytics event to a component, and that one change triggered 6.6 million events in one week, producing a surprise $733 analytics bill.

Key Points:

- AI coding tools often lack crucial context (for example, how often a component renders), which leads to expensive errors that human reviewers can easily miss

- Traditional code review practices are struggling to keep pace with the volume of AI-generated code being committed

- As writing code gets easier, our tolerance for the tedium of reviewing it drops

- Usage-based pricing models can magnify minor code errors into major financial problems
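Working through the incident's own figures shows how quickly usage-based pricing scales a small mistake. This assumes the $733 covers the week's 6.6 million events and that cost grows linearly with event count; real pricing tiers and free allowances may differ, so the result is an effective blended rate, not the provider's published price.

$$
\frac{\$733}{6.6 \times 10^{6}\ \text{events}} \approx \$1.11 \times 10^{-4}\ \text{per event} \approx \$111\ \text{per million events}
$$

$$
\frac{6.6 \times 10^{6}\ \text{events}}{7\ \text{days}} \approx 9.4 \times 10^{5}\ \text{events per day}
$$

$$
\$733 \times \frac{30\ \text{days}}{7\ \text{days}} \approx \$3{,}141\ \text{for a 30-day month if left unfixed}
$$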

Why Human Review Still Matters

The most insightful takeaway from this incident is how profoundly AI is changing the balance of the developer workflow. Before AI, developers spent approximately two-thirds of their time writing code and one-third reviewing it. As tools like GitHub Copilot and Cursor dramatically accelerate code generation, this ratio has flipped, or at least it should have.

This shift collides with a quirk of human psychology: the more powerful our tools become, the less patience we have for tedious tasks. When AI makes writing code feel effortless, code review, which has barely changed, feels increasingly burdensome by comparison. Yet this is precisely when we need more review, not less.

The implications for the industry are significant. Teams that maintain rigorous code review cultures will have a competitive advantage over those that rush AI-generated code into production. Companies with strong review practices will experience fewer outages, fewer unexpected costs, and higher customer trust.

Solutions Beyond the Obvious

While the Devin incident focuses attention on code review, there are additional approaches, not covered in the video, that can help prevent similar problems:

AI-specific testing harnesses: Consider building test environments that measure the resource usage patterns of new code. For analytics events, this could mean creating ephemeral test environments that track event emission rates and alert on anomalous patterns before deployment.
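A minimal sketch of such a harness, assuming the analytics client can be swapped for a test double during a simulated session. Everything here is hypothetical: `EventBudgetHarness`, the `dashboard_viewed` event, the per-event budgets, and the two render functions that stand in for a component re-rendering. The buggy version reproduces the incident's pattern of emitting on every render; the fixed version emits once.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class EventBudgetHarness:
    """Hypothetical test double: records analytics events emitted during a
    simulated session and flags any event that exceeds its budget."""
    budgets: dict            # max allowed emissions per event name per session
    default_budget: int = 10  # assumed budget for events with no explicit entry
    counts: Counter = field(default_factory=Counter)

    def capture(self, event_name: str) -> None:
        # Stand-in for a real analytics client's capture/track call.
        self.counts[event_name] += 1

    def violations(self) -> dict:
        # Return every event whose count exceeded its budget.
        return {
            name: n
            for name, n in self.counts.items()
            if n > self.budgets.get(name, self.default_budget)
        }


def render_buggy(harness: EventBudgetHarness, state: dict) -> None:
    # Bug pattern: the event fires on every render, not once per view.
    harness.capture("dashboard_viewed")


def render_fixed(harness: EventBudgetHarness, state: dict) -> None:
    # Guarded: the event fires only the first time the component renders.
    if not state.get("viewed_tracked"):
        harness.capture("dashboard_viewed")
        state["viewed_tracked"] = True


def simulate(render, renders: int = 500) -> dict:
    # Render the component repeatedly, as a busy page might, then report violations.
    harness = EventBudgetHarness(budgets={"dashboard_viewed": 1})
    state: dict = {}
    for _ in range(renders):
        render(harness, state)
    return harness.violations()


print(simulate(render_buggy))  # {'dashboard_viewed': 500}
print(simulate(render_fixed))  # {}
```

In a CI pipeline, a non-empty result from `violations()` could fail the build, so the render-loop bug surfaces before it reaches a usage-billed service.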

Rate limiting by default: Implement system-wide rate limiting on API calls, database writes, and third-party service usage. This creates a safety valve that caps how much damage a single runaway code path can do.
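One common way to implement such a limit is a token bucket, sketched below in Python. The `TokenBucket` class, the `send_event` wrapper, and the `rate`/`capacity` values are hypothetical illustrations, not any vendor's API; a production setup would more likely enforce limits at a gateway or proxy and alert when calls are dropped. The clock is injectable so the example runs deterministically.

```python
import time


class TokenBucket:
    """Minimal token-bucket limiter: allows bursts up to `capacity`,
    then refills at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def send_event(limiter: TokenBucket, send, event: str) -> bool:
    # Forward the call only when a token is available; otherwise drop it.
    if limiter.allow():
        send(event)
        return True
    return False


# Usage: a runaway loop tries to send 1,000 events at once.
sent = []
fake_now = [0.0]
limiter = TokenBucket(rate=5, capacity=10, clock=lambda: fake_now[0])
results = [send_event(limiter, sent.append, "dashboard_viewed") for _ in range(1000)]
print(len(sent))  # 10: only the burst capacity gets through

fake_now[0] = 1.0  # one second later, 5 tokens have refilled
more = [send_event(limiter, sent.append, "dashboard_viewed") for _ in range(1000)]
print(len(sent))  # 15
```

Dropping is the simplest policy; queuing or sampling the excess calls are alternatives when some of that traffic still matters.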
